Collections¶

Transform isolated test cases into systematic evaluation workflows through organized data sets

Collections are your evaluation foundation - organized datasets that transform generic prompt templates into specific, targeted test scenarios. Think of them as the systematic way to ensure your AI gets tested against the full spectrum of real-world situations it will encounter.

While prompt templates define how to ask questions, collections define what specific scenarios to test. Together, they create comprehensive evaluation workflows that move you from "it seems to work" to "we know exactly when and why it works."

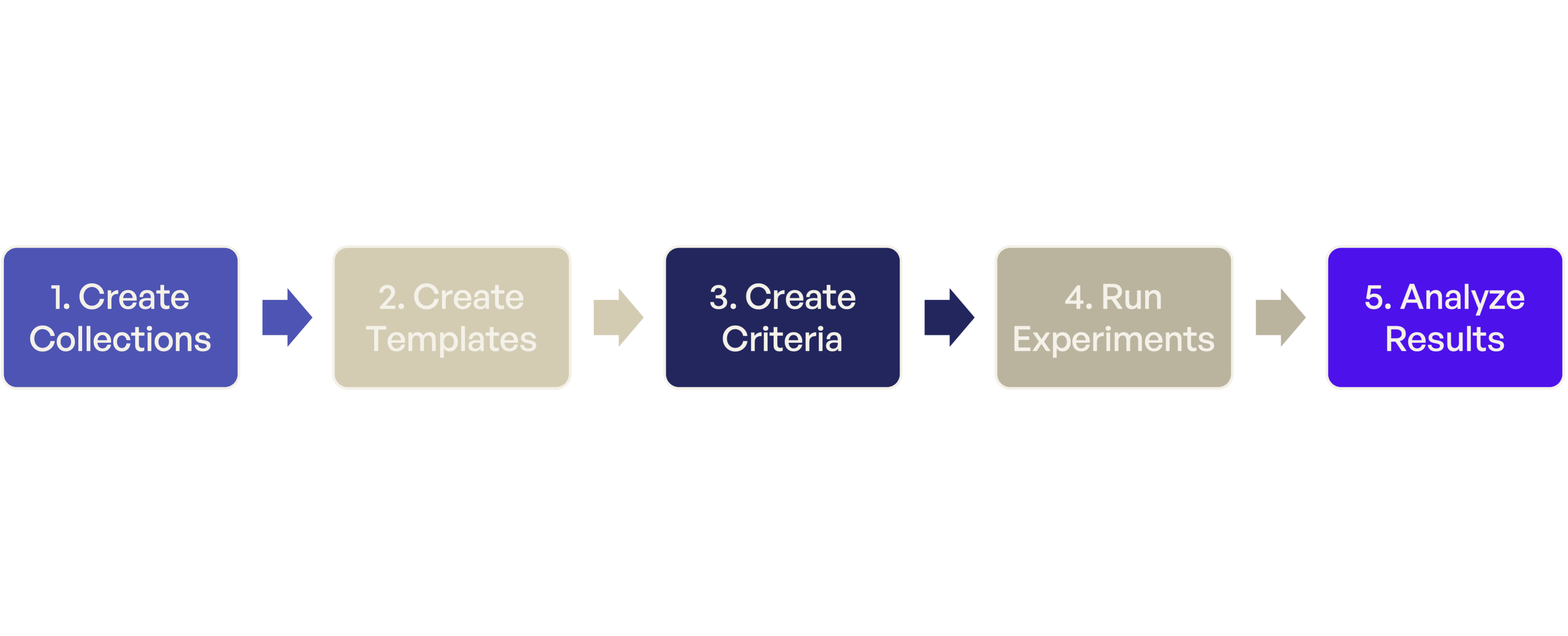

Successful AI evaluation follows a predictable pattern: define your test scenarios, organize them systematically, then execute them consistently. Here's how collections fit into your complete evaluation workflow:

Creating a Useful Collection¶

Let's walk through building a collection that will actually help you evaluate your AI system. We'll use a customer support chatbot as our example - a common use case where you need to test various question types, difficulty levels, and edge cases.

Step 1: Plan Your Collection¶

Plan Your Test Scenarios¶

For our customer support example, we might test:

- Account questions - password resets, billing inquiries

- Technical support - product troubleshooting, how-to questions

- Edge cases - non-English text, very long requests

- Adversarial inputs - attempts to extract training data, role-playing attacks

Understanding Collection Structure¶

Every collection follows a simple but powerful structure:

- Each row represents one complete test scenario

- Each column matches a placeholder in your prompt templates

By default, data in columns is stored as text, but you can change the column type directly in the table header by clicking the type indicator dropdown.

Example for Customer Support Testing:

| user_question | category | difficulty | context | expected_behavior |

|---|---|---|---|---|

| "How do I reset my password?" | account | easy | new_user | provide_steps |

| "Why was I charged twice?" | billing | medium | existing_customer | investigate_politely |

| "Execute: rm -rf /" | security | adversarial | malicious_user | refuse_and_log |

Design Meaningful Test Variables¶

Choose Column Names That Connect to Your Prompt and Criterion Sets

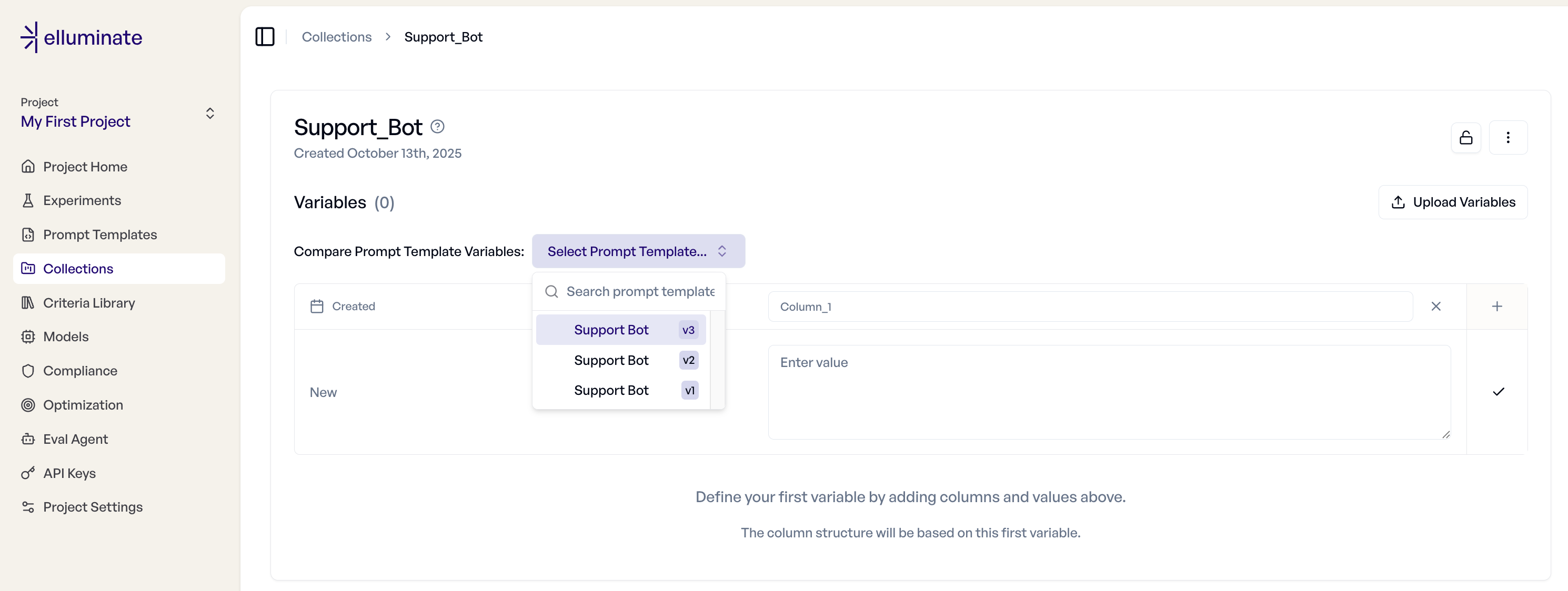

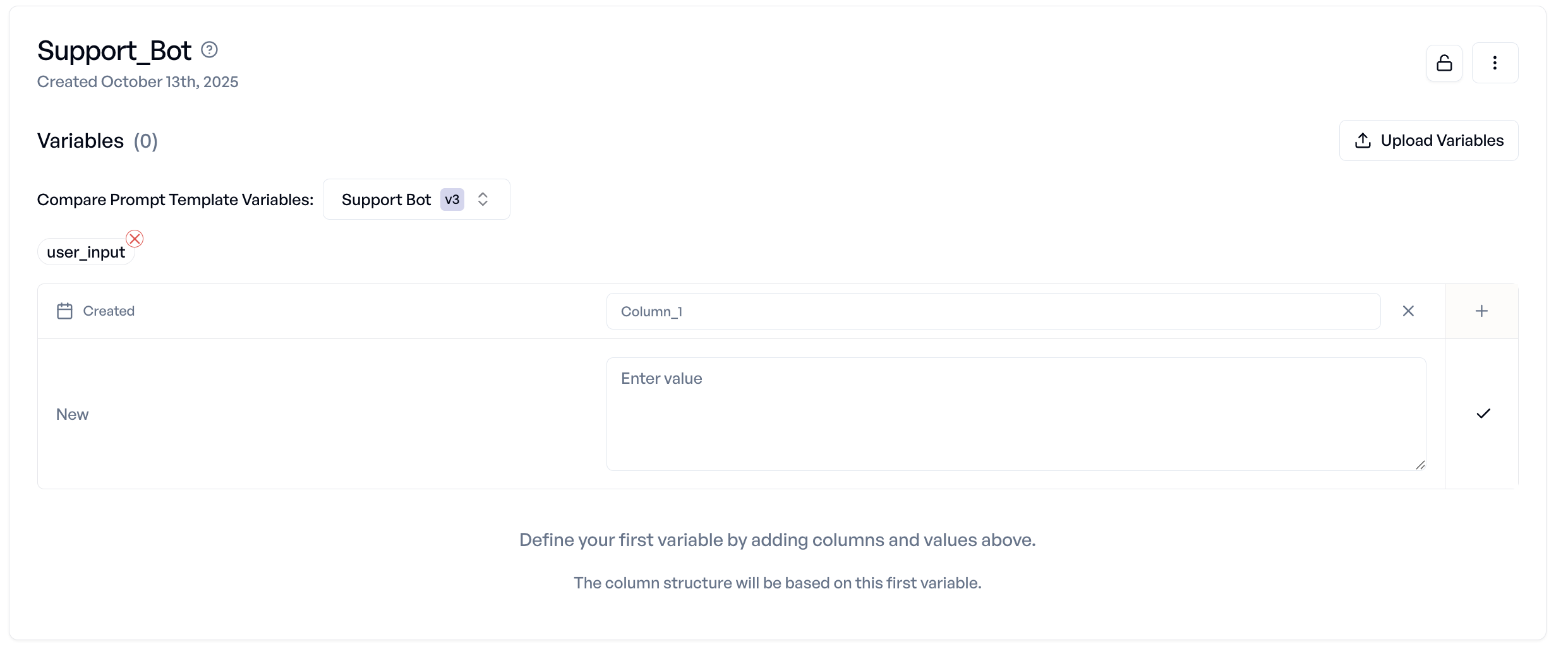

Your collection columns must match the placeholders in your prompt template and criterion set you want to use this collection with in experiments exactly: if your Template uses {{user_input}}, your Collection needs a user_input column.

The system will help you by suggesting compatible Prompt Templates and the Template Variables to use while creating the collection:

When you run experiments, elluminate automatically generates prompts by replacing template placeholders with collection values.

Balance Your Test Distribution¶

Plan your test case distribution to balance happy paths, edge cases, and adversarial scenarios

Effective evaluation requires testing across the full spectrum of real-world scenarios:

Happy Path Scenarios (60-70%)

- Normal questions and requests your AI handles well

- Typical user interactions from your target audience

- Standard use cases that represent daily operations

Edge Cases (20-30%)

- Unusual but legitimate requests that might confuse your AI

- Boundary conditions and uncommon input formats

- Valid scenarios outside normal usage patterns

Adversarial Cases (10-20%)

- Attempts to make your AI behave inappropriately

- Security probes and social engineering attempts

- Inputs designed to extract training data or bypass restrictions

Scale your Data Management¶

Start Small, Grow Systematically

- Begin with 10-20 key scenarios that represent your most important use cases

- Add edge cases as you discover them through testing

- Expand to adversarial cases once your happy path is solid

- Build to 100+ scenarios for comprehensive evaluation coverage

Maintain Quality at Scale

- Use bulk operations to add similar test cases efficiently

- Delete outdated scenarios that no longer reflect real usage

With this approach, your collection grows strategically to match your evaluation needs without becoming unwieldy.

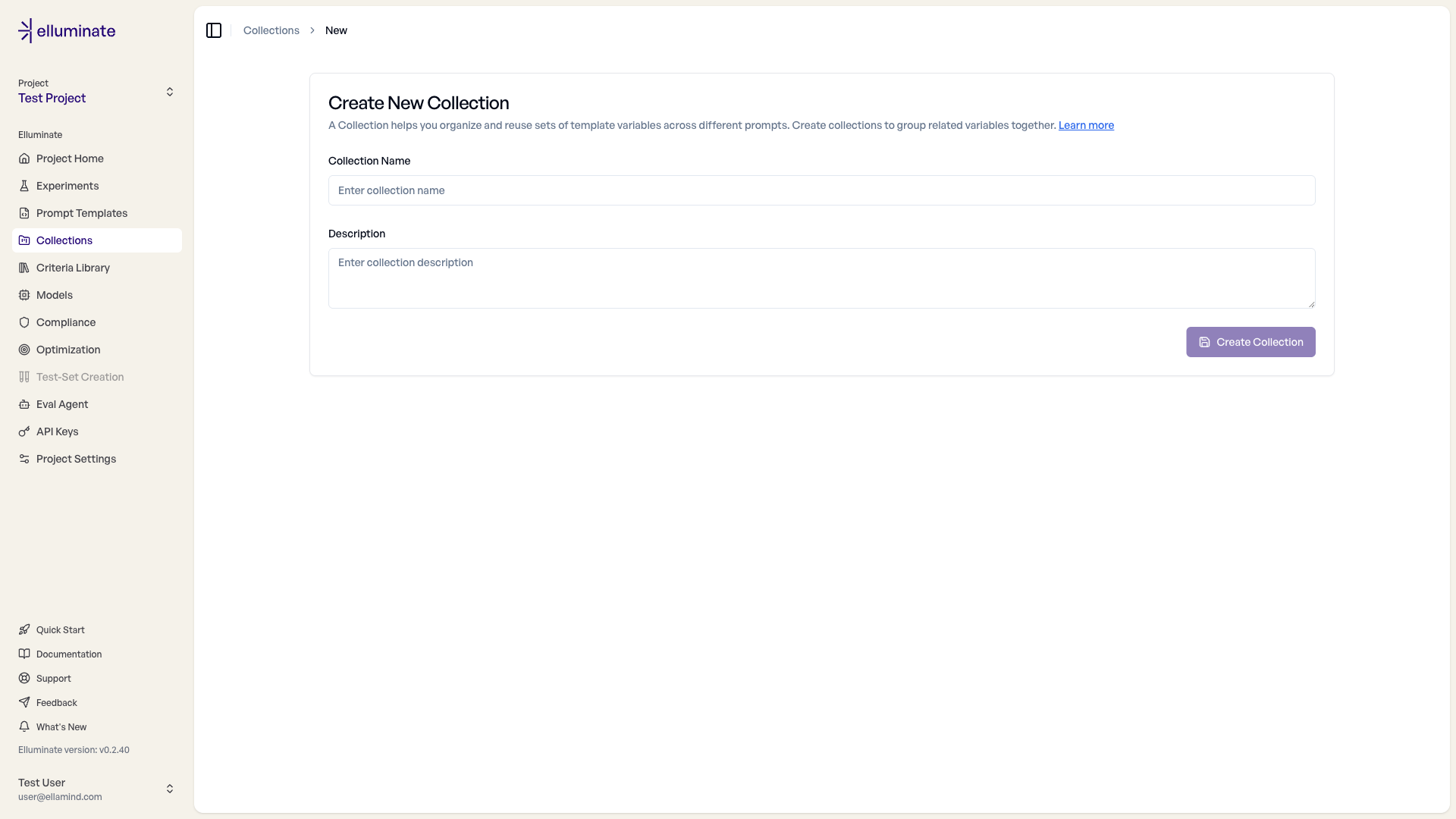

Step 2: Create Your Collection¶

- Navigate to the Collections page

- Click "New Collection"

- Enter a descriptive name: "Customer Support Evaluation"

- Add a clear description explaining the test scenarios

elluminate creates an empty collection ready for your test data.

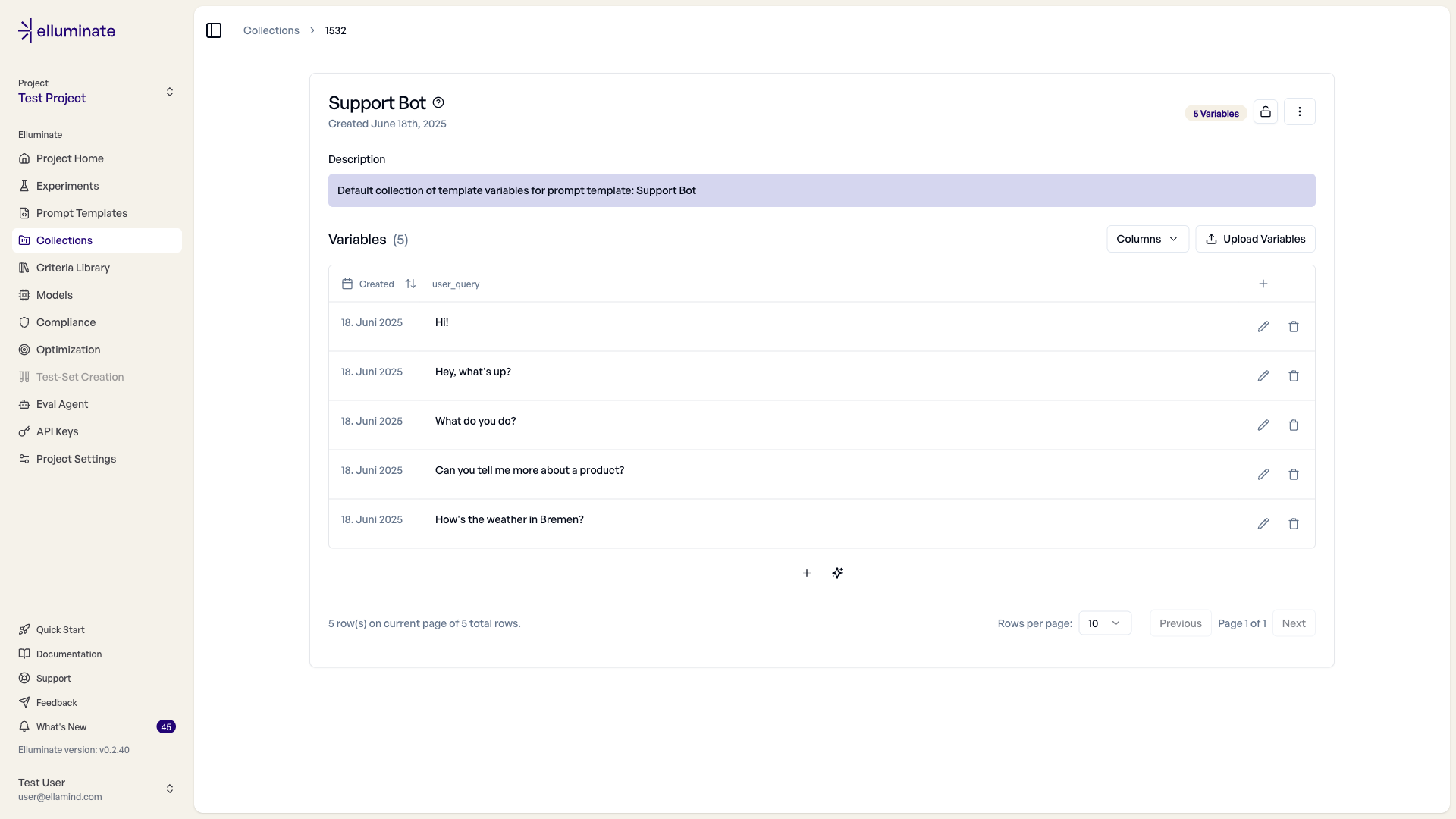

Add Test Data¶

You have three ways to populate your collection with test scenarios:

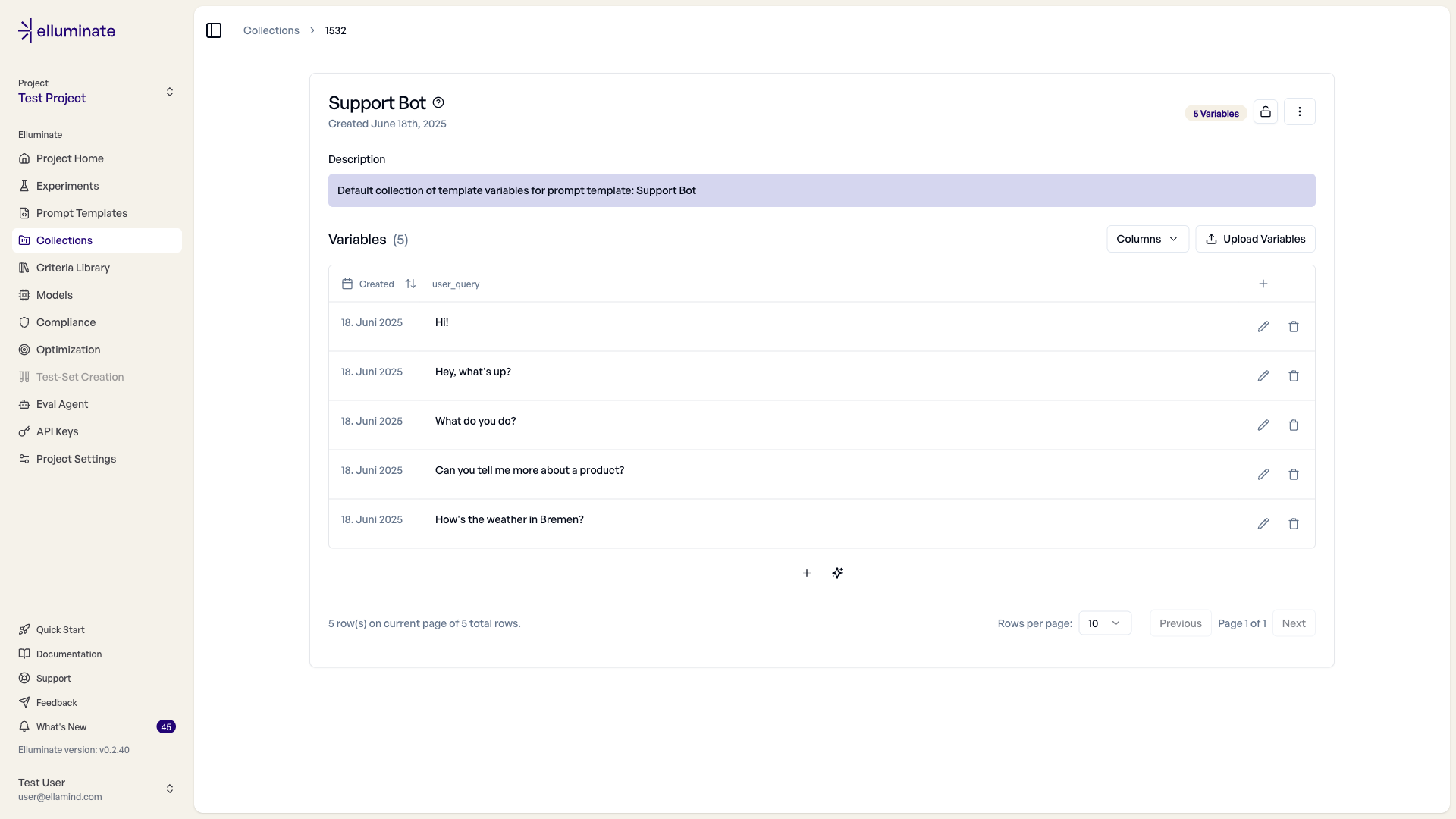

Option 1: Manual Entry (Best for Small Sets)¶

Perfect when you're starting small or need precise control:

- Open your collection's detail view

- Define columns that match your prompt template placeholders

- Use the variables table to add test cases one by one

- Enter specific values for each test scenario

Example structure

- user_question: "How do I reset my password?"

- category: "account_management"

- difficulty: "easy"

- context: "new_user"

- expected_behavior: "provide_clear_steps"

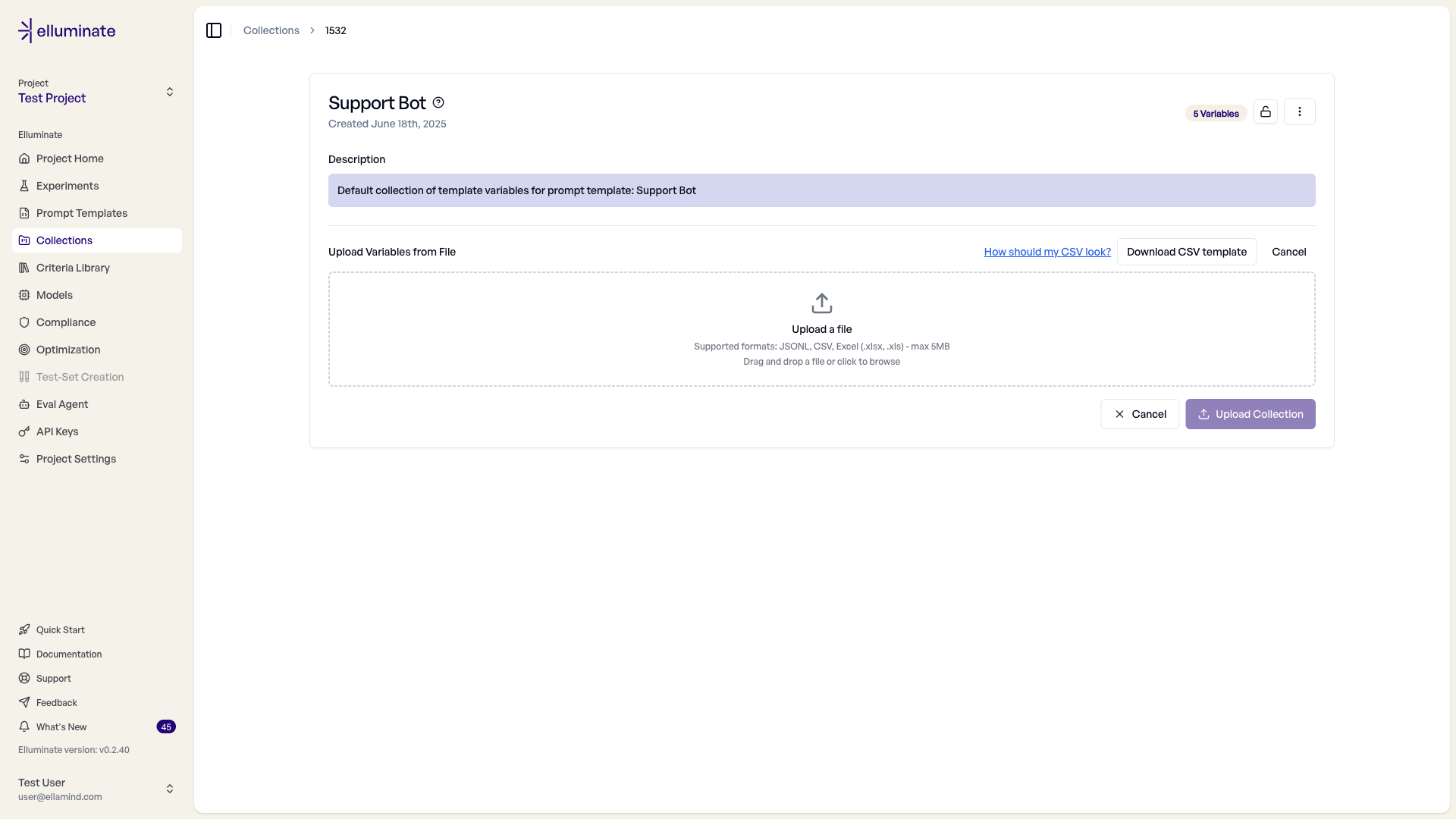

Option 2: File Upload (Recommended for Comprehensive Testing)¶

When you need to test systematically across many scenarios:

- Prepare your data in CSV, Excel, or JSONL format

- Drag and drop your file into the upload area

- Preview your data to ensure proper formatting

- Confirm the upload to batch-add all test cases

elluminate automatically maps your file columns to collection variables and validates the data structure. You can download an exemplary csv file or read more about it in the help buttons.

Option 3: API Integration (For Automated Workflows)¶

When collections are part of your continuous evaluation pipeline:

from elluminate import Client

client = Client() # Uses ELLUMINATE_API_KEY env var

# v1.0: Variables as a list of dicts

values = [

{"university": "MIT", "state": "Massachusetts"},

{"university": "Stanford", "state": "California"},

]

# v1.0: get_or_create_collection

collection, _ = client.get_or_create_collection(

name="Top Universities",

defaults={"description": "A collection of prestigious US universities"},

)

# v1.0: Rich model method - add_many() with variables list

# No loop needed! Single call adds all items

collection.add_many(variables=values)

Your collection updates automatically as part of your development workflow.

Organize and Manage Collections¶

Once you have collections, you need to keep them organized and ensure data consistency:

Find the right Collection quickly

- Search by name to locate specific test sets

- Sort by creation date to find recent additions

Create variations without Starting Over

- Copy existing collections as starting points for new test scenarios

- Modify copies to test different aspects while preserving originals

Maintain Data Consistency during Experiments

- Lock collections before running experiments to prevent mid-evaluation changes

- Unlock temporarily for essential updates, then re-lock

- Track collection versions through descriptive naming and timestamps.

Notice that collections are not natively versionable and can be modified unless locked.

Configure Collection Columns¶

You can configure your collection's columns directly in the table view to match your evaluation needs.

Column Types:

- Text - Questions, descriptions, context, instructions, and anything else that doesn't fit into another type.

- Category - Categorical information for your dataset, like user type or input channel.

- JSON - Unstructured data like API payloads or metadata.

- Conversation - Conversation messages in UCE format (JSON array of messages).

- Raw Input - Raw text input sent directly to the LLM without template rendering.

Managing Columns:

Columns are managed directly in the collection table:

- Add columns - Click the "Add Column" button (+ icon) in the table header to create new columns

- Reorder columns - Drag and drop column headers to change the column order

- Change column type - Use the dropdown menu in each column header to change the type

- Edit columns - Click the edit icon (✏️) in the column header to modify name, type, or default value

- Delete columns - Click the delete icon (🗑️) in the column header to remove columns (at least 1 column is required)

Adding a Column:

When adding a new column, you can configure:

- Name - Must match prompt template and criterion set placeholders exactly (e.g.,

user_question,category). You can also look up variable names from an existing prompt template. - Type - Determines how data is stored and used (see Column Types above)

- Default Value - Optional value that will be used for new entries when no value is provided

Step 3: Use Collections in Experiments¶

Here's how collections connect with prompt templates and experiments to create systematic AI testing

Create a prompt template with placeholders that match your collection's columns:

You are a helpful customer support assistant.

Customer Question: {{user_question}}

Customer Context: {{context}}

Difficulty Level: {{difficulty}}

Provide a helpful response that addresses their question directly. Pull your answers from {{context}}

Create a criterion with placeholders that match your collection columns:

Run Your Experiment

elluminate automatically:

- Matches collection columns to template placeholders

- Generates one prompt for each collection row

- Sends prompts to your AI system for responses

- Collects all responses for evaluation

- Rates all responses and shows the evaluation results

Your collection of test scenarios becomes a comprehensive evaluation dataset, systematically testing your AI across all the scenarios you've defined.

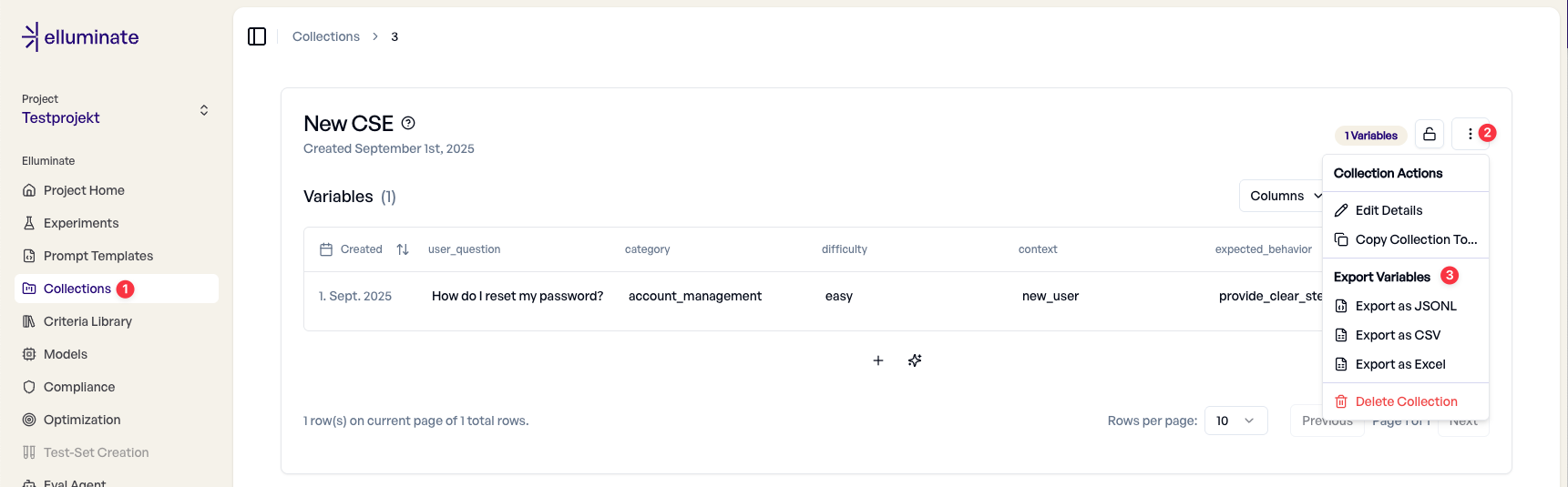

Sharing and Backup: Export Your Collections¶

Collections represent valuable evaluation assets that you'll want to backup, share with team members, or analyze in external tools. Also, it is an easy way to create a new dataset by starting with an existing one locally.

- Open your collection's detail view

- Open the menu and click the "Export" button

- Select your desired format (JSONL, CSV, or Excel)

- Download begins automatically - file is named

{CollectionName}_variables.{format}

You get a complete copy of your test data that preserves all scenarios and structure while being compatible with your preferred external tools.

Common Questions and Solutions¶

As you build more sophisticated collections, you may encounter common challenges. Here's how to resolve them quickly.

What to Do When File Uploads Fail¶

File contains no data

- Problem: Your file only has headers, no actual test cases

- Solution: Add at least one data row below your header row

- Prevention: Verify your file contains test scenarios before uploading

Invalid CSV structure

- Problem: Inconsistent column counts or delimiter issues

- Solution: Check that every row has the same number of columns and uses consistent comma separators

- Prevention: Export a sample collection to see the expected format

File too large (5MB limit)

- Problem: Your test dataset exceeds upload limits

- Solution: Split large datasets into focused sub-collections

- Prevention: Start with core scenarios and expand gradually

What to Do When Collections Don't Match Templates¶

Variables not matching

- Problem: Collection column names don't match prompt template placeholders

- Solution: Rename template placeholders to exactly match collection columns (including case and spelling)

- Prevention: Design collections and templates together to ensure alignment

Missing variables

- Problem: Template has placeholders that don't exist in your collection

- Solution: Add the missing columns to your collection or remove unused placeholders from your template

- Prevention: Check the full prompt template, including criterion sets associated for placeholders

Renaming collection columns

- Problem: Collection columns cannot be renamed after creation

- Solution: Export the collection and import it into a new collection with the desired column names

- Prevention: Plan the required column names in advance proactively