Ratings

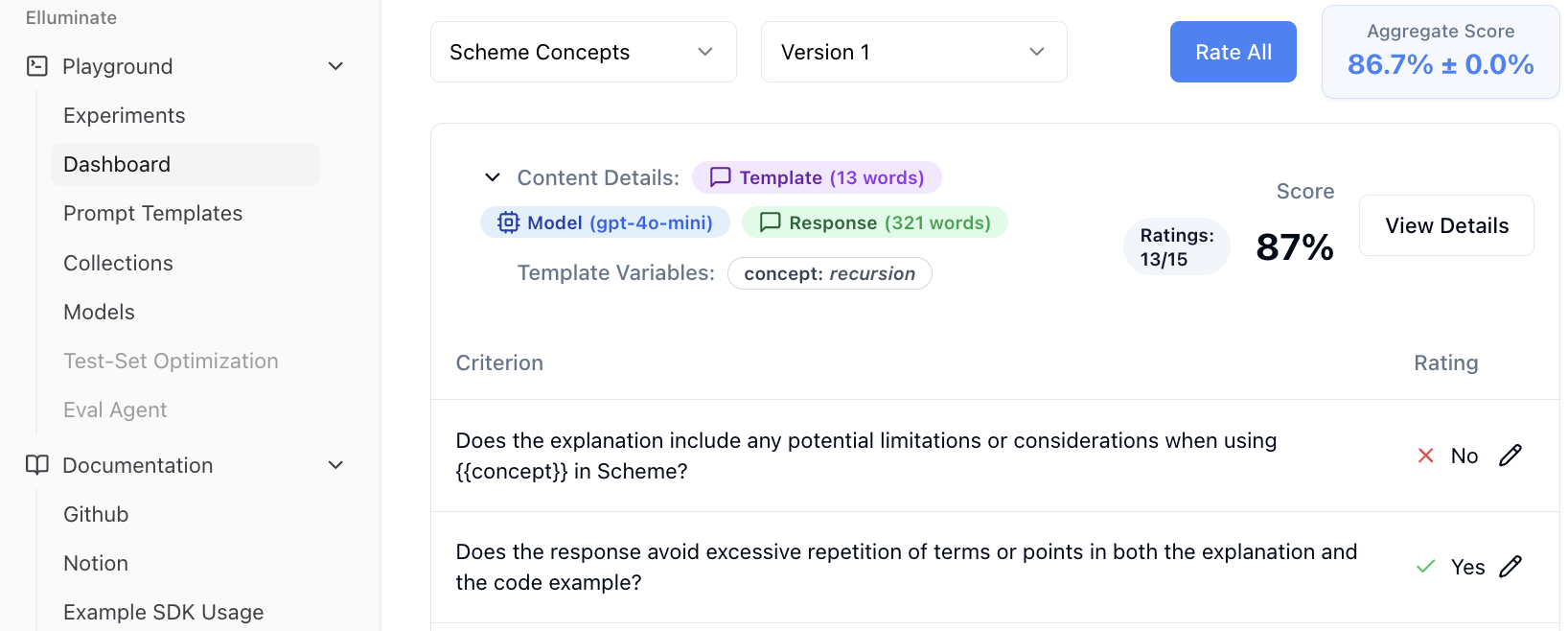

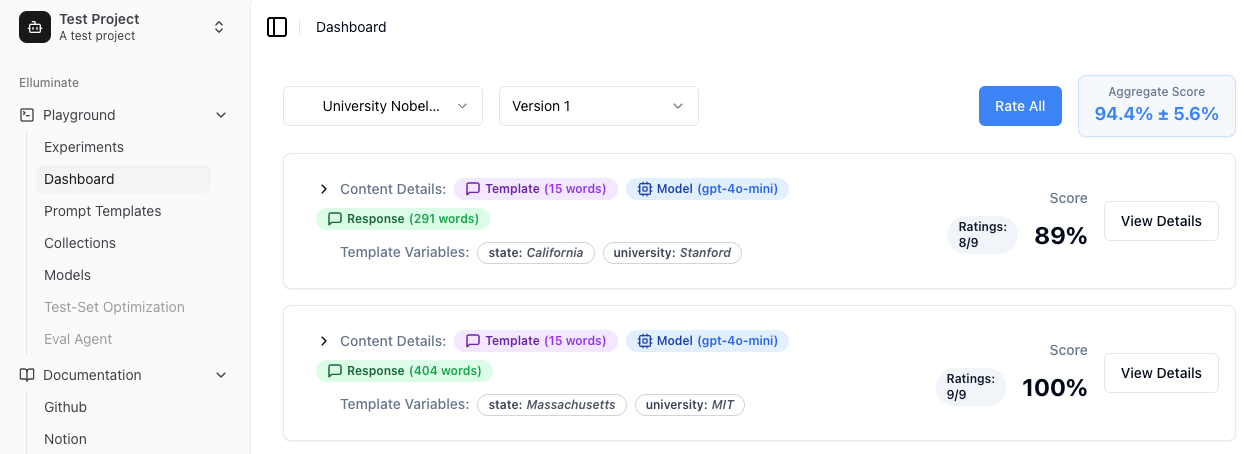

A rating evaluates the quality of a response to a prompt. Each rating is created by checking the response against a criterion. The dashboard displays rated responses with detailed metrics and supports interactive analysis of the evaluation results.

A typical rating entry shows:

- Overall score aggregated from individual criteria ratings

- Individual ratings for each evaluation criterion

- LLM configuration details used to generate the response

- Word count statistics for both the template and generated response

If the rating for an individual criterion is incorrect, you can correct it manually here.
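How an overall score and the word counts might be derived can be sketched in a few lines. This is a minimal illustration, not Elluminate's implementation: it assumes each criterion rating is a pass/fail verdict and that the overall score is the fraction of criteria that passed. The names `CriterionRating`, `overall_score`, and `word_count` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CriterionRating:
    """One rating of a response against a single criterion (hypothetical shape)."""
    criterion: str
    passed: bool


def overall_score(ratings: list[CriterionRating]) -> float:
    """Return the fraction of criteria that passed; 0.0 when there are no ratings."""
    if not ratings:
        return 0.0
    return sum(r.passed for r in ratings) / len(ratings)


def word_count(text: str) -> int:
    """Count whitespace-separated words."""
    return len(text.split())


ratings = [
    CriterionRating("Does the response define the concept?", True),
    CriterionRating("Does the response include an example?", False),
]

# A manual correction overwrites the stored verdict for one criterion,
# and the aggregated score changes accordingly.
ratings[1].passed = True

print(overall_score(ratings))
print(word_count("Explain the concept of recursion."))
```

Because the score is recomputed from the individual verdicts, a manual correction to one criterion is reflected in the aggregate without any extra bookkeeping.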

Rate with generated criteria and responses

The Quick Start shows how to rate one response against multiple criteria. In that example, both the criteria and the responses are generated automatically by Elluminate.

Useful for:

- Quick Evaluation: Evaluate responses without defining criteria manually

- Quality Generation: Generate high-quality, dynamic criteria based on the prompt context

- Framework Development: Explore or prototype evaluation frameworks

- AI-Powered Assessment: Leverage AI-generated criteria for comprehensive assessment

-

Initializes the Elluminate client using your configured environment variables from the setup phase.

-

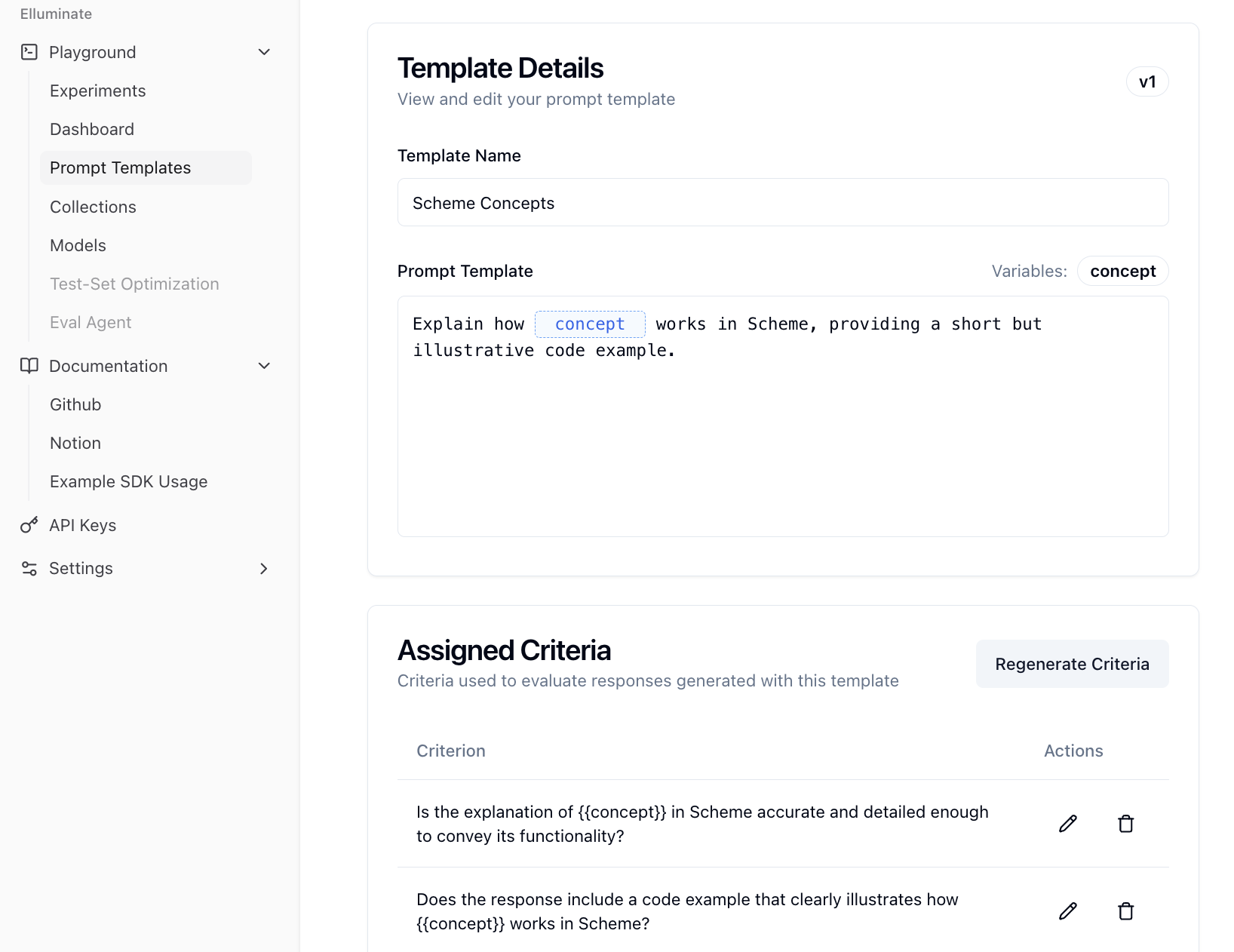

Creates a prompt template using mustache syntax, incorporating template variables (like

conceptin this example). If the template already exists, it just gets returned. -

Generates evaluation criteria automatically for your prompt template or gets the existing criteria.

-

Creates a template variables collection. This will be used to collect the template variables for a prompt template.

-

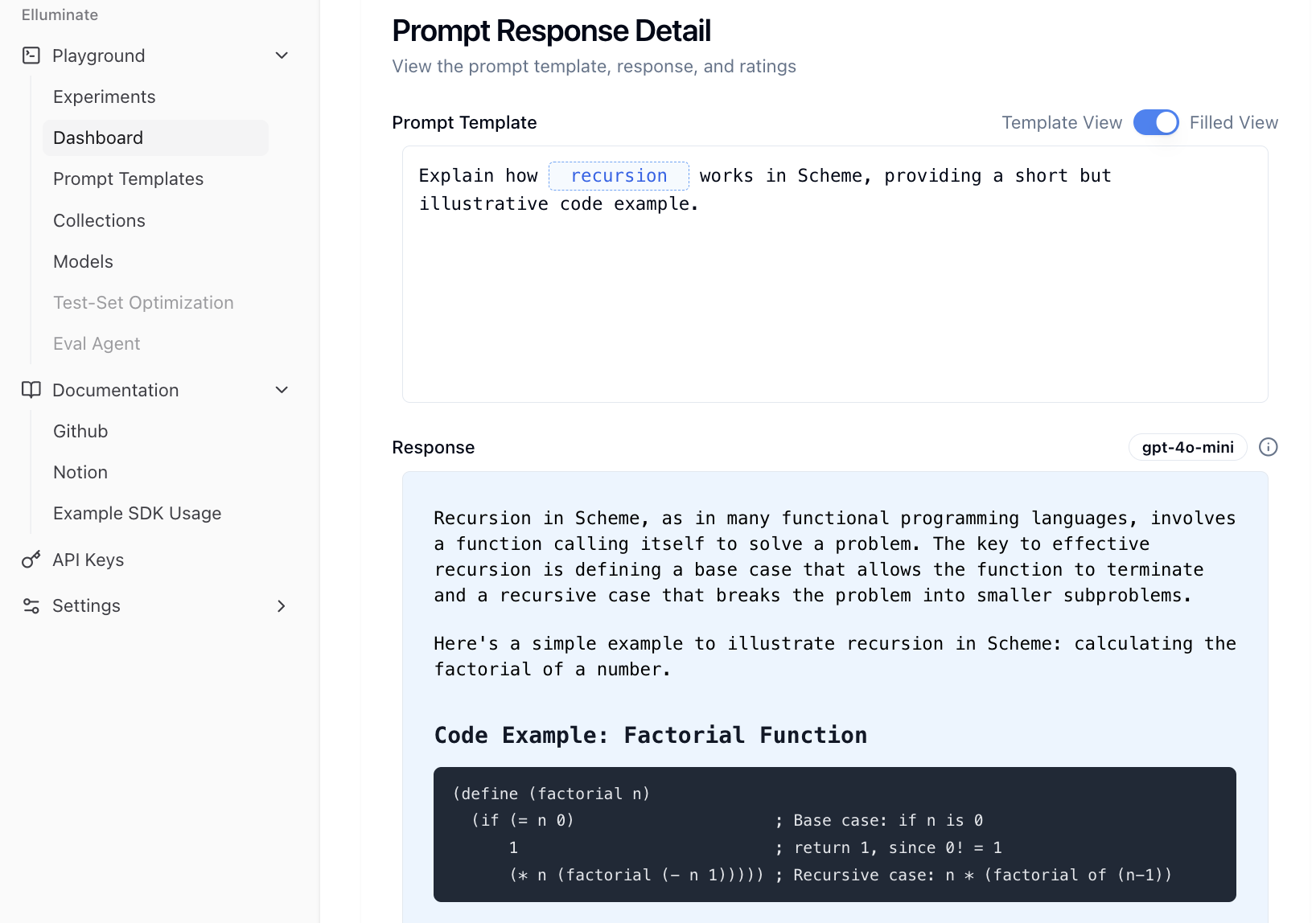

Adds a template variable to the collection. This will be used to fill in the template variable (replacing

conceptwithrecursion). -

Creates a response by using your prompt template and filling in the template variable.

-

Evaluates the response against the generated criteria, returning detailed ratings for each criterion.
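The template-variable substitution in the steps above can be illustrated without the SDK. The sketch below is a simplified stand-in for mustache rendering that handles only plain `{{name}}` placeholders. The template text and the `render` helper are assumptions made for illustration and are not part of the Elluminate API.

```python
import re

# Matches {{name}} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} placeholder with its value from `variables`."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing template variable: {name}")
        return variables[name]

    return PLACEHOLDER.sub(substitute, template)


# Hypothetical template using the `concept` variable from the example.
template = "Explain the concept of {{concept}} in simple terms."
prompt = render(template, {"concept": "recursion"})
print(prompt)
```

Raising on a missing variable, rather than leaving the placeholder in the prompt, surfaces incompatible inputs before any response is generated.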

The generated criteria are associated with the prompt template and can be inspected one by one in the Template Details. Here you can also change or delete entries, or start a completely new generation process.

To view the generated response, click View Details in the Dashboard View. This opens the Prompt Response Detail View, which shows the response and its associated prompt.

Rate custom criteria and responses

For more control over the evaluation process, you can manually specify criteria and responses. This is particularly useful for:

- Custom Quality Standards: Define and enforce specific quality criteria tailored to your use case

- External Response Evaluation: Assess responses from any source, not limited to those generated through Elluminate

- Standardized Testing: Apply uniform evaluation criteria to maintain consistency across test suites

The custom-criteria example performs the following steps:

- Adds custom criteria to a given prompt template. Using `delete_existing=True` ensures that any existing criteria are removed before the new ones are added. This gives you full control over which aspects of the response are evaluated.

- Creates a collection to which a single template variable is added, to be used for inference and then evaluation.

- Manually adds the response together with its metadata. The response is associated with:

    - The prompt template

    - Template variables (to know which prompt generated it)

    - The experiment (to aggregate ratings in one place)

    - LLM configuration metadata (to track which model and settings were used)
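The effect of `delete_existing=True` and the associations attached to a manually added response can be modelled with plain Python. This is a hedged sketch only: `add_criteria` and `ResponseRecord` are hypothetical names, the field layout is an assumption, and skipping exact duplicates when `delete_existing` is false is a choice made for this example rather than documented SDK behaviour.

```python
from dataclasses import dataclass, field


def add_criteria(existing: list[str], new: list[str], delete_existing: bool = False) -> list[str]:
    """Return the criteria list after adding `new`.

    With delete_existing=True the old criteria are dropped first;
    otherwise new criteria are appended, skipping exact duplicates.
    """
    result = [] if delete_existing else list(existing)
    for criterion in new:
        if criterion not in result:
            result.append(criterion)
    return result


@dataclass
class ResponseRecord:
    """An externally produced response plus the associations listed above."""
    response: str
    prompt_template: str
    template_variables: dict[str, str]
    experiment: str
    llm_config: dict[str, object] = field(default_factory=dict)


generated = ["Is the explanation accurate?"]
custom = ["Does the response mention a base case?"]

kept = add_criteria(generated, custom)                        # both criteria
replaced = add_criteria(generated, custom, delete_existing=True)  # custom only

record = ResponseRecord(
    response="Recursion is when a function calls itself...",
    prompt_template="Explain the concept of {{concept}}.",
    template_variables={"concept": "recursion"},
    experiment="custom-criteria-demo",
    llm_config={"model": "example-model", "temperature": 0.0},
)
```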

Rate a set of prompts

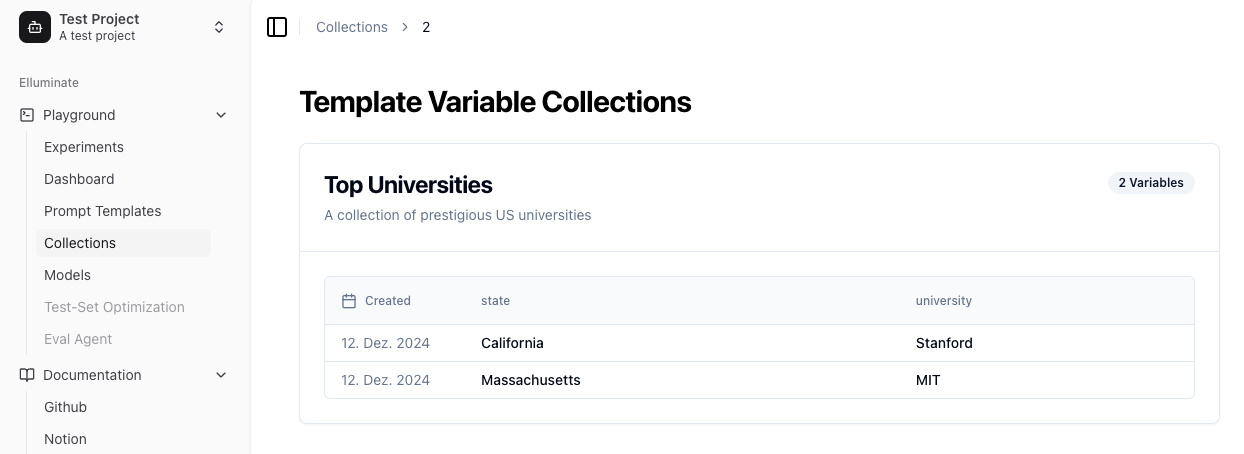

When you have multiple variations of template variables to test, you can use a TemplateVariablesCollection (or simply collection) to organize and evaluate them together. This is essential for systematic evaluation of prompt performance across different inputs.

This approach is particularly powerful when you need to:

- Input Testing: Evaluate how well your prompt performs with diverse test cases and edge cases

- Performance Analysis: Uncover trends and patterns in how your prompt responds to different inputs

- Iterative Optimization: Use insights from testing to refine and enhance your prompt template

To rate a set of prompts:

- Define your test cases as template variables

- Create a collection to store your test cases

- Add each set of variables to the collection

- Make sure the prompt template is compatible with your variables
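The compatibility requirement in the last step can be checked before any responses are generated. The sketch below models a collection as a plain list of dictionaries and treats an entry as compatible when it supplies every placeholder the template uses. The helper names, the template, and the entries are all invented for illustration and do not reflect the Elluminate SDK.

```python
import re

# Matches {{name}} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def required_variables(template: str) -> set[str]:
    """Names of all {{placeholders}} that appear in the template."""
    return set(PLACEHOLDER.findall(template))


def is_compatible(template: str, entry: dict[str, str]) -> bool:
    """An entry is compatible when it supplies every placeholder the template uses."""
    return required_variables(template).issubset(entry)


def render(template: str, entry: dict[str, str]) -> str:
    """Fill each placeholder with the matching value from the entry."""
    return PLACEHOLDER.sub(lambda m: entry[m.group(1)], template)


template = "Explain the concept of {{concept}} to a {{audience}}."

# A collection is modelled here as a plain list of variable sets.
collection = [
    {"concept": "recursion", "audience": "beginner"},
    {"concept": "closures", "audience": "senior engineer"},
    {"concept": "monads"},  # missing "audience", so not compatible
]

prompts = [render(template, e) for e in collection if is_compatible(template, e)]
skipped = [e for e in collection if not is_compatible(template, e)]

for prompt in prompts:
    print(prompt)
```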

The Collections view provides an organized overview of your template variable sets. Each collection is identified by its name and contains multiple entries, with timestamps showing when they were added.

In the Dashboard View, you can choose the prompt template and see the ratings for each response to all prompts generated from the collection. Here you can easily inspect how the responses perform for each entry in the collection.
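The per-entry comparison that the dashboard supports can also be approximated offline. The following sketch groups pass/fail ratings by collection entry and reports the weakest one. The sample rows and the `pass_rate_by_entry` helper are made up for illustration, and the pass/fail shape of a rating is an assumption.

```python
from collections import defaultdict

# Each tuple: (collection entry label, criterion, passed). Invented sample data.
ratings = [
    ("recursion", "Defines the concept", True),
    ("recursion", "Includes an example", True),
    ("closures", "Defines the concept", True),
    ("closures", "Includes an example", False),
]


def pass_rate_by_entry(rows):
    """Map each entry label to the fraction of its criteria that passed."""
    totals = defaultdict(lambda: [0, 0])  # label -> [passed, total]
    for label, _criterion, passed in rows:
        totals[label][0] += int(passed)
        totals[label][1] += 1
    return {label: p / n for label, (p, n) in totals.items()}


rates = pass_rate_by_entry(ratings)

# The entry with the lowest pass rate is the first candidate for prompt refinement.
weakest = min(rates, key=rates.get)
print(rates, weakest)
```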