Versioning

Learn how to manage multiple versions of your prompt templates and how versioning keeps your experiments reproducible.

elluminate's versioning system enables safe, traceable evolution of your AI evaluation components. When you modify prompt templates, template variable collections, or evaluation criteria, elluminate automatically creates a new version and preserves the earlier ones. This keeps experiments reproducible and lets you test improvements in a controlled way.

What Gets Versioned?

elluminate versions components that users create and modify:

- Prompt Templates - Each edit creates a new version (v1, v2, v3...) with immutable content that you can select and compare

- Evaluation Criteria - Criteria are versioned when you modify them, preserving previous versions for experiment consistency

Note: The system also tracks collection changes and rating model versions for experiment reproducibility, but these are handled automatically and don't require user management.
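
The idea of immutable, numbered versions can be sketched in plain Python. This is an illustrative model only, not elluminate's implementation: the `TemplateVersion` and `TemplateHistory` classes and the rule that unchanged content does not create a new version are assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class TemplateVersion:
    """One immutable snapshot of a template's content (hypothetical model)."""
    name: str
    version: int
    content: str
    created_at: datetime


@dataclass
class TemplateHistory:
    """Append-only list of versions for a single template name."""
    name: str
    versions: list[TemplateVersion] = field(default_factory=list)

    def save(self, content: str) -> TemplateVersion:
        # Assumption: saving unchanged content returns the latest version
        # instead of creating a duplicate.
        if self.versions and self.versions[-1].content == content:
            return self.versions[-1]
        new = TemplateVersion(
            name=self.name,
            version=len(self.versions) + 1,
            content=content,
            created_at=datetime.now(timezone.utc),
        )
        self.versions.append(new)  # earlier versions are never modified
        return new


history = TemplateHistory("Support Bot")
v1 = history.save("You are a helpful support agent.")
v2 = history.save("You are a concise, helpful support agent.")
print(v1.version, v2.version)       # 1 2
print(history.versions[0].content)  # v1 content is still intact
```

Because each `TemplateVersion` is frozen and the history only appends, anything that recorded "v1" keeps seeing the same content after v2 is created.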

Managing Prompt Template Versions

Creating New Versions

When you edit an existing prompt template, elluminate prompts you to create a new version:

- Navigate to Templates - Go to your project's Templates page

- Select Template - Click on the template you want to modify

- Edit Content - Make changes to messages, placeholders, or response format

- Version Confirmation - elluminate displays a dialog asking if you want to create a new version

- Create Version - Confirm to save your changes as the next version (e.g., v1 → v2)

The version dialog explains that changes create new versions while preserving old ones, ensuring your historical experiments remain intact.

Viewing Version History

Each prompt template displays its version number prominently:

- Template Cards - Show current version badge (e.g., "v3")

- Template Details - Display version number in the header

- Creation Date - Each version has its own timestamp for tracking changes over time

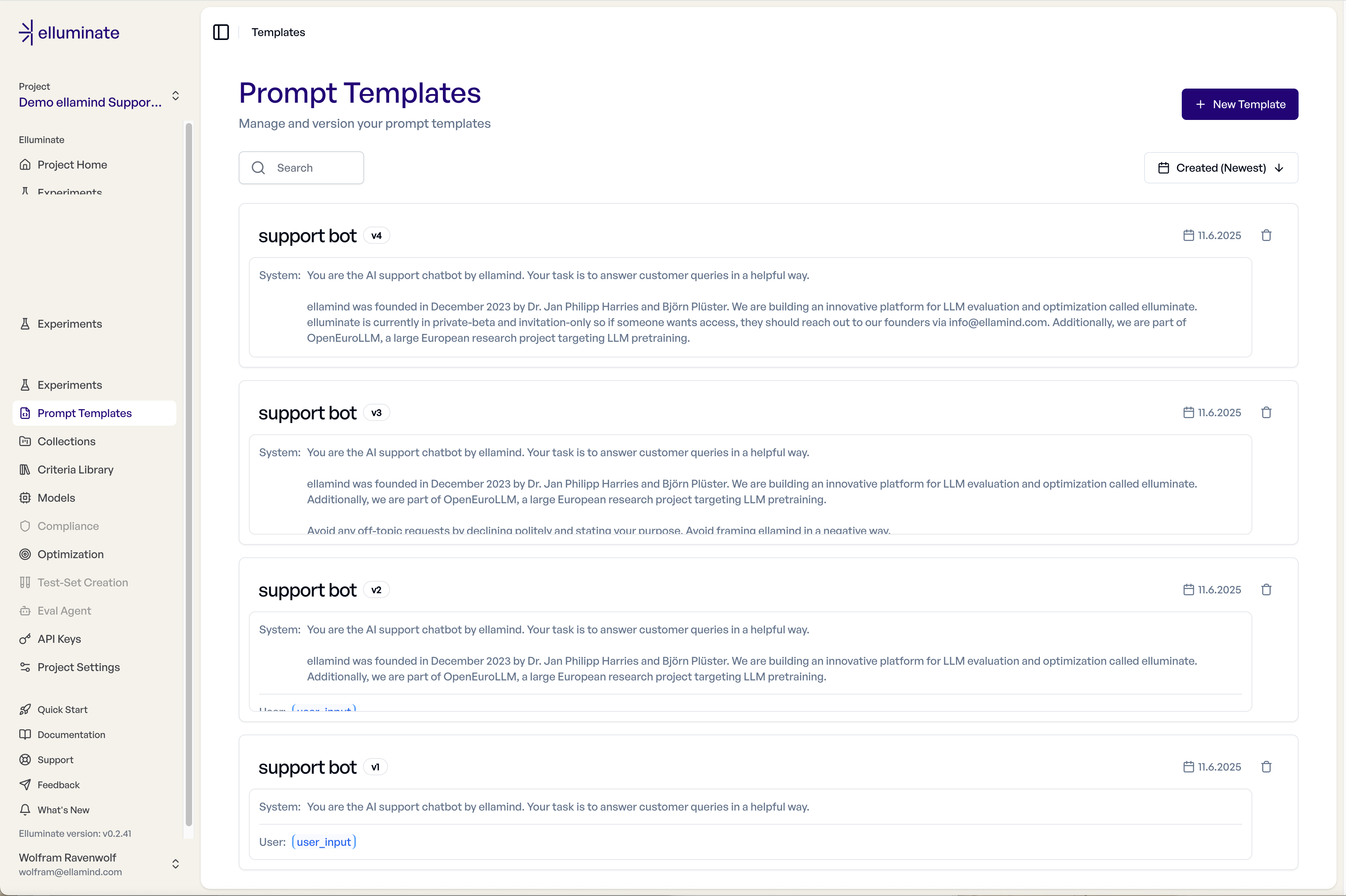

Template list showing multiple versions of the Support Bot template (v1, v2, v3, v4)

Version Selection in Components

When configuring experiments or other components, you can select specific template versions:

- Experiment Creation - Choose which version to use for new experiments

- Scheduled Experiments - Option to always use the latest version or pin to a specific version

- Comparisons - Compare results across different template versions

Managing Evaluation Criteria Versions

Automatic Criteria Versioning

When you modify evaluation criteria, elluminate automatically creates new versions:

- Preservation - Previous versions of criteria remain available for existing experiments

- Consistency - Past experiment results always use the original criteria versions they were created with

- Evolution Tracking - System maintains complete history of criteria changes for audit purposes

While criteria versions aren't displayed with badges like templates, the same versioning principles apply to ensure experiment reproducibility.

Version Tracking in Experiments

Experiments capture complete version snapshots for full reproducibility:

At Experiment Creation:

- Prompt Template Version - Records exact version used (e.g., "Template v2")

- Criteria Versions - Links to specific versions of evaluation criteria used

- System State - Captures collection state and rating model version used

Version Consistency:

- Immutable References - Experiments always reference the same versions they were created with

- Historical Accuracy - Past experiment results never change when you create new versions

- Reproduction Capability - You can always recreate the exact conditions of any experiment
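
To make the snapshot idea concrete, here is a minimal sketch of how an experiment record could pin the versions it was created with. The `ExperimentSnapshot` class, its field names, and the sample values are hypothetical and only illustrate the principle; they do not describe elluminate's internal data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentSnapshot:
    """Versions captured at experiment creation (hypothetical structure)."""
    template_name: str
    template_version: int
    criteria_versions: tuple[tuple[str, int], ...]  # (criterion, version) pairs
    rating_model_version: str


latest_template_version = 2

snapshot = ExperimentSnapshot(
    template_name="Support Bot",
    template_version=latest_template_version,
    criteria_versions=(("Is polite", 1), ("Answers the question", 3)),
    rating_model_version="rating-model-a",  # placeholder value
)

# A later edit bumps the latest version, but the snapshot keeps its own copy.
latest_template_version = 3
print(snapshot.template_version)  # 2
```

Storing the version numbers by value in a frozen record is what keeps past results stable when newer versions appear.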

Best Practices

Version Management Strategy

- Descriptive Names - Use clear template names that indicate their purpose

- Incremental Changes - Make small, targeted improvements between versions

- Testing Before Production - Validate new versions with smaller experiments first

- Documentation - Keep notes on what changed between versions

Experimental Workflow

- Baseline Establishment - Start with a well-tested template version as your baseline

- Controlled Testing - Test new versions against the same collection and criteria

- Performance Comparison - Compare metrics across versions to identify improvements

- Rollback Planning - Keep successful versions available for rollback if needed

Template Evolution

- Compatibility Planning - Ensure new templates work with existing collections
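
One way to check compatibility before running an experiment is to compare the placeholders in a template with the variables a collection provides. The sketch below assumes the `{{name}}` placeholder syntax seen in this page's SDK example; the `missing_variables` helper and the sample column names are hypothetical and not part of the elluminate SDK.

```python
import re

# Matches {{name}} placeholders, allowing optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def missing_variables(messages, collection_columns):
    """Return placeholder names used in the messages that the collection lacks."""
    used = set()
    for message in messages:
        used.update(PLACEHOLDER.findall(message["content"]))
    return sorted(used - set(collection_columns))


messages = [
    {"role": "system", "content": "You are a helpful customer support agent for {{product}}."},
    {"role": "user", "content": "{{customer_query}}"},
]

print(missing_variables(messages, ["customer_query"]))  # ['product']
```

An empty result means every placeholder in the new version has a matching variable in the collection.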

Version Troubleshooting

Common Versioning Issues

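- Missing Versions - If a version seems to be missing, check the template's version history; versions are preserved even when not actively used

- Experiment Consistency - Past experiments always use their original template versions, so their results do not reflect later edits

- Compatibility Issues - New template versions must work with existing collections; check that placeholders still match the collection's variables

- Performance Changes - Results may differ between runs that used different system versions, such as a different rating model version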

Version Recovery

- Historical Access - All versions remain accessible through the UI

- Experiment Recreation - You can create new experiments using any historical version

- Data Export - Version information is included in all data exports

- Audit Trail - Version history provides complete change tracking

SDK Integration

For programmatic version management, use the elluminate SDK:

```python
from elluminate import Client

client = Client()  # Uses ELLUMINATE_API_KEY env var

# Create new template version
template, created = client.prompt_templates.get_or_create(
    name="Customer Support Assistant",
    messages=[
        {"role": "system", "content": "You are a helpful customer support agent..."},
        {"role": "user", "content": "{{customer_query}}"},
    ],
)
print(f"Template: {template.name} v{template.version}")

# List all versions of a template
all_versions = client.prompt_templates.list(name="Customer Support Assistant")
for version in all_versions:
    print(f"Version {version.version}: Created {version.created_at}")

# Use specific version in experiment
# (test_collection and model_config are assumed to be defined earlier)
experiment = client.experiments.create(
    name="Support Template v3 Evaluation",
    prompt_template=template,  # Uses specific version
    collection=test_collection,
    llm_config=model_config,
)
```

For complete SDK documentation, see the API Reference.

Advanced Version Features

Scheduled Experiment Versioning

- Latest Version Option - Scheduled experiments can automatically use the newest template version

- Version Pinning - Alternatively, pin a scheduled experiment to a specific version for consistent evaluation

- Change Detection - System tracks when scheduled experiments use new versions
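
The difference between following the latest version and pinning one, together with a simple form of change detection, can be sketched as follows. The `resolve_version` function and `ScheduledRun` class are hypothetical illustrations in plain Python, not elluminate APIs.

```python
from typing import Optional


def resolve_version(available: list[int], pinned: Optional[int] = None) -> int:
    """Return the pinned version if one is set, otherwise the newest version."""
    if not available:
        raise ValueError("template has no versions")
    if pinned is None:
        return max(available)
    if pinned not in available:
        raise ValueError(f"version {pinned} does not exist")
    return pinned


class ScheduledRun:
    """Remembers the version used by the previous run (hypothetical model)."""

    def __init__(self, pinned: Optional[int] = None):
        self.pinned = pinned
        self.last_used: Optional[int] = None

    def next_version(self, available: list[int]) -> tuple[int, bool]:
        """Resolve the version for this run and report whether it changed."""
        version = resolve_version(available, self.pinned)
        changed = self.last_used is not None and version != self.last_used
        self.last_used = version
        return version, changed


follow_latest = ScheduledRun()
print(follow_latest.next_version([1, 2]))     # (2, False)
print(follow_latest.next_version([1, 2, 3]))  # (3, True)

pinned = ScheduledRun(pinned=2)
print(pinned.next_version([1, 2, 3]))         # (2, False)
```

A pinned run never reports a change, which is the point of pinning: any shift in its metrics cannot be caused by a template edit.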

Version-Based Analysis

- Cross-Version Comparison - Compare experiment results across template versions

- Performance Tracking - Monitor how template changes affect evaluation metrics

- Regression Detection - Identify when new versions perform worse than previous ones
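
As a rough illustration of regression detection, the sketch below compares the mean score of each template version with its predecessor. The scores, the `find_regressions` helper, and the tolerance value are made up for the example; real values would come from your own experiment results.

```python
from statistics import mean

# Hypothetical per-response scores for three versions of one template.
scores_by_version = {
    1: [0.70, 0.75, 0.80],
    2: [0.85, 0.90, 0.80],
    3: [0.75, 0.70, 0.80],
}


def find_regressions(scores, tolerance=0.02):
    """Return (previous, current) pairs where the mean score dropped by more than tolerance."""
    versions = sorted(scores)
    regressions = []
    for prev, curr in zip(versions, versions[1:]):
        if mean(scores[curr]) < mean(scores[prev]) - tolerance:
            regressions.append((prev, curr))
    return regressions


print(find_regressions(scores_by_version))  # [(2, 3)]
```

The tolerance keeps small fluctuations from being flagged. For the comparison to be meaningful, each version should be run against the same collection and criteria.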

Understanding versioning enables confident iteration on your AI evaluation components while maintaining the reproducibility essential for systematic improvement.