LLM Configs

Connect and configure language models from popular providers or your custom AI applications for comprehensive testing

Overview

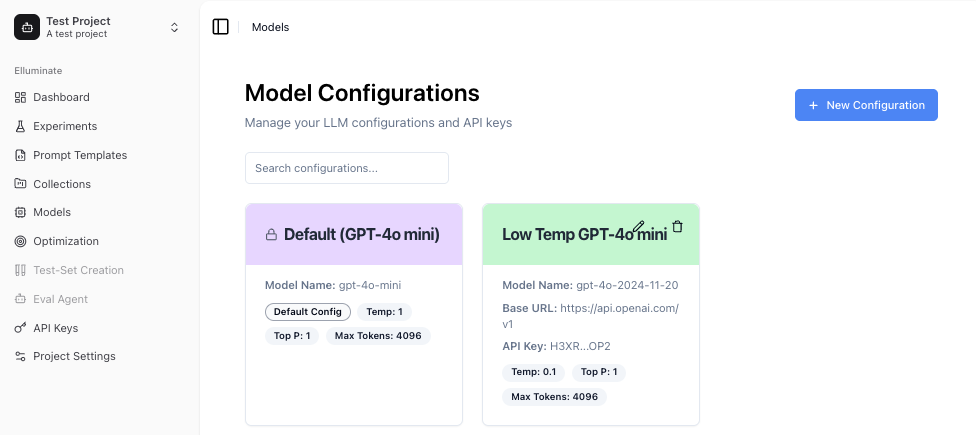

elluminate comes with several LLM models configured by default and lets you connect any other language model to your projects, from popular providers like OpenAI to your own custom AI applications. This flexibility makes it possible to test prompts across different models, monitor your deployed AI systems, and optimize for cost and performance.

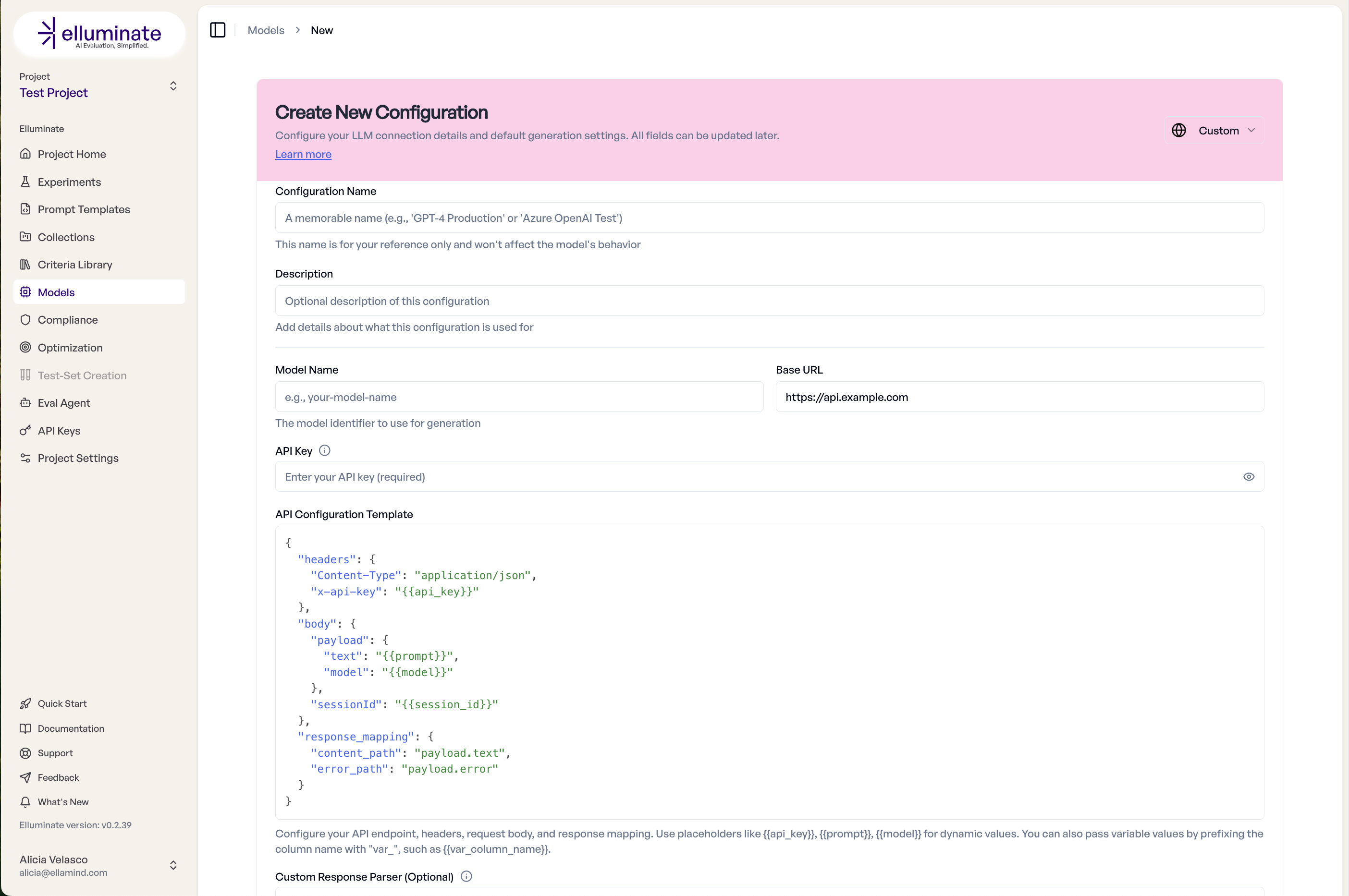

Create New Configuration

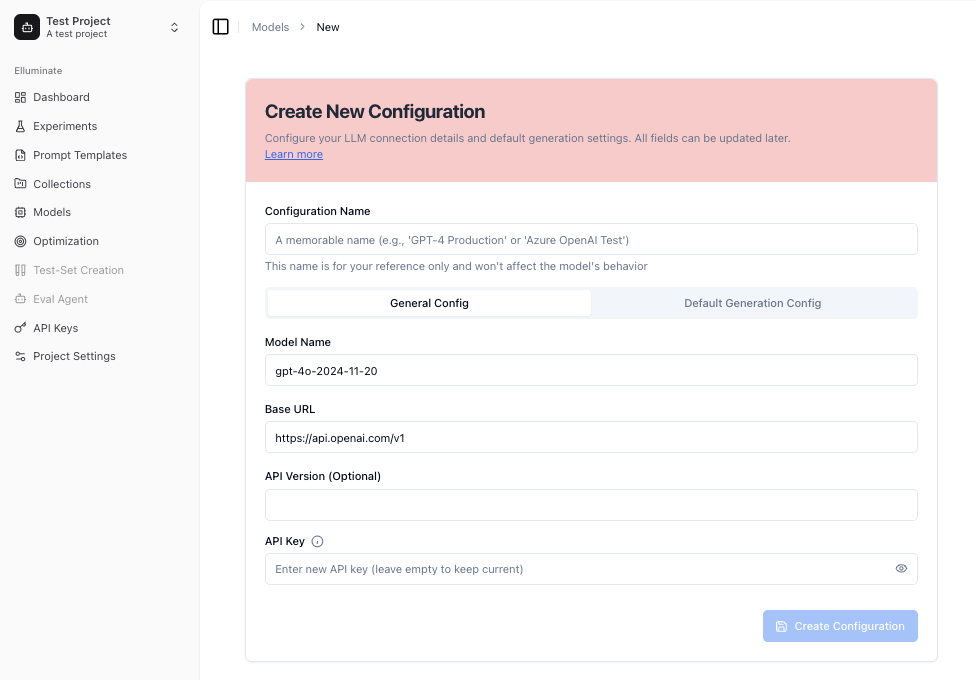

Creating a new configuration lets you adjust several model parameters. The most common are Temperature and Top P, which control the randomness and diversity of the model's output. For a standard configuration, select the checkbox to use the provider's default values.
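The sampling itself happens on the provider's side. A toy example still makes the two settings concrete. The sketch below is a generic illustration of temperature scaling followed by nucleus (top-p) sampling over a hand-written set of token scores. sample_token is a hypothetical helper. It is not part of elluminate or any provider SDK.

```python
import math
import random
from typing import Dict, Optional


def sample_token(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_p: float = 1.0,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick one token: temperature-scale the scores, then sample from the top-p nucleus."""
    if rng is None:
        rng = random.Random()
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding: always take the highest-scoring token
        return max(logits, key=logits.get)
    # Temperature scaling: lower values sharpen the distribution, higher values flatten it
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}  # stable softmax numerators
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()), key=lambda tp: tp[1], reverse=True)
    # Nucleus: smallest set of most likely tokens whose cumulative probability reaches top_p
    nucleus, cumulative = [], 0.0
    for tok, prob in ranked:
        nucleus.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    tokens = [tok for tok, _ in nucleus]
    probs = [prob for _, prob in nucleus]
    # random.choices normalizes the weights, i.e. renormalizes within the nucleus
    return rng.choices(tokens, weights=probs, k=1)[0]
```

Lowering the temperature pushes probability toward the top token. Lowering top_p drops the unlikely tail before sampling. With temperature=0, the sketch always returns the highest-scoring token.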

The Max Tokens and Max Connections settings limit the length of each response and the number of concurrent requests for each model configuration.
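Here is what a concurrency cap means in practice. The following minimal asyncio sketch keeps at most max_connections requests in flight. run_with_connection_limit and fake_send are hypothetical names. The sketch shows only the general technique, not how elluminate implements the setting internally.

```python
import asyncio


async def run_with_connection_limit(prompts, send, max_connections: int):
    """Send every prompt, but never have more than max_connections requests in flight."""
    semaphore = asyncio.Semaphore(max_connections)
    in_flight = 0
    peak = 0  # highest number of simultaneous requests observed

    async def limited(prompt):
        nonlocal in_flight, peak
        async with semaphore:  # waits while max_connections requests are already running
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await send(prompt)
            finally:
                in_flight -= 1

    # gather returns results in the same order as the input prompts
    results = await asyncio.gather(*(limited(p) for p in prompts))
    return results, peak


async def fake_send(prompt):
    # Hypothetical stand-in for a model call that takes a little time
    await asyncio.sleep(0.01)
    return prompt.upper()
```

For example, `asyncio.run(run_with_connection_limit(["a", "b", "c"], fake_send, max_connections=2))` processes all three prompts with at most two running at once.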

For some newer models, you can also adjust the Reasoning Effort.

Setting a different Base URL and API Key routes requests through the endpoint of your choice.

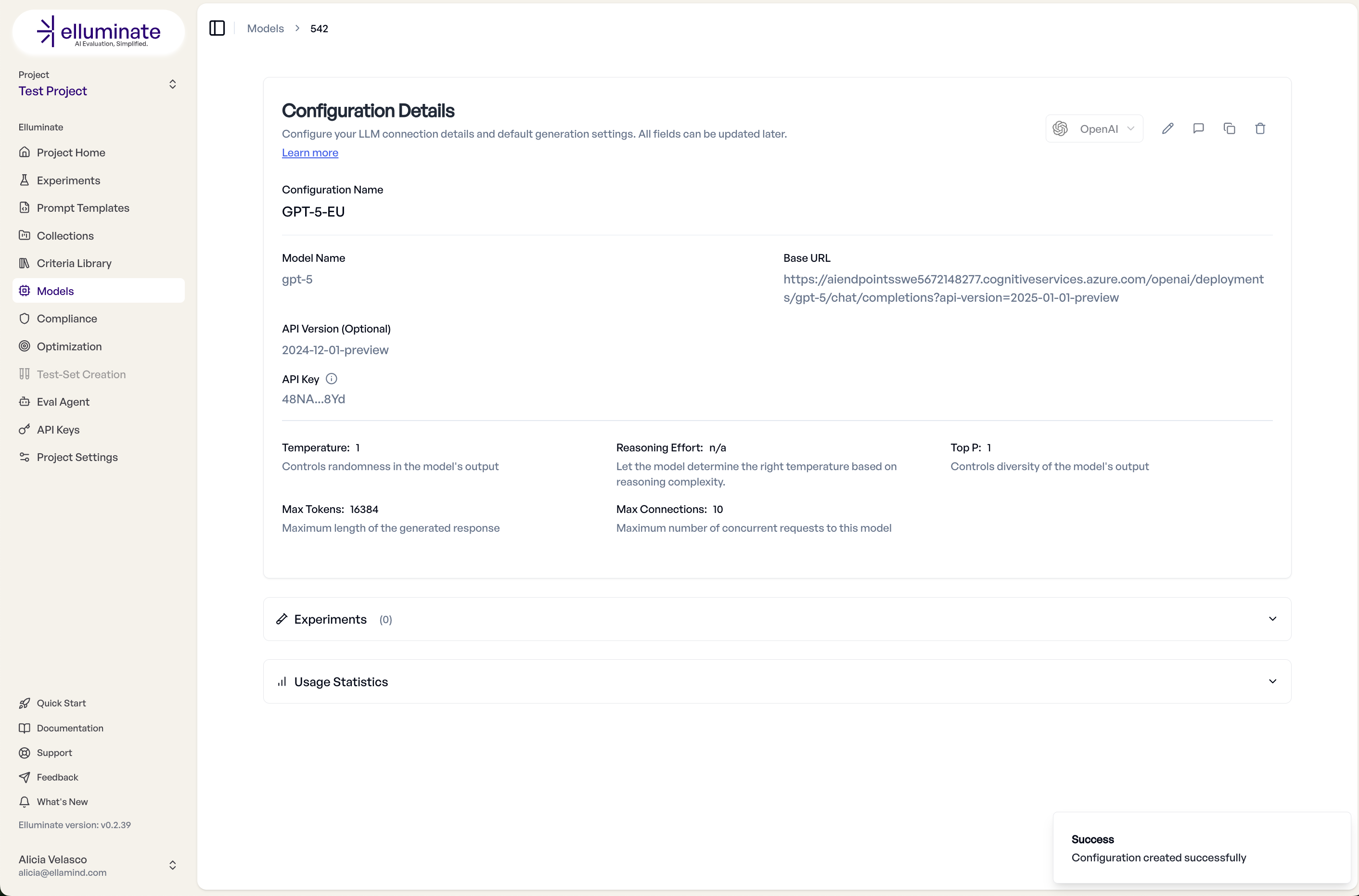

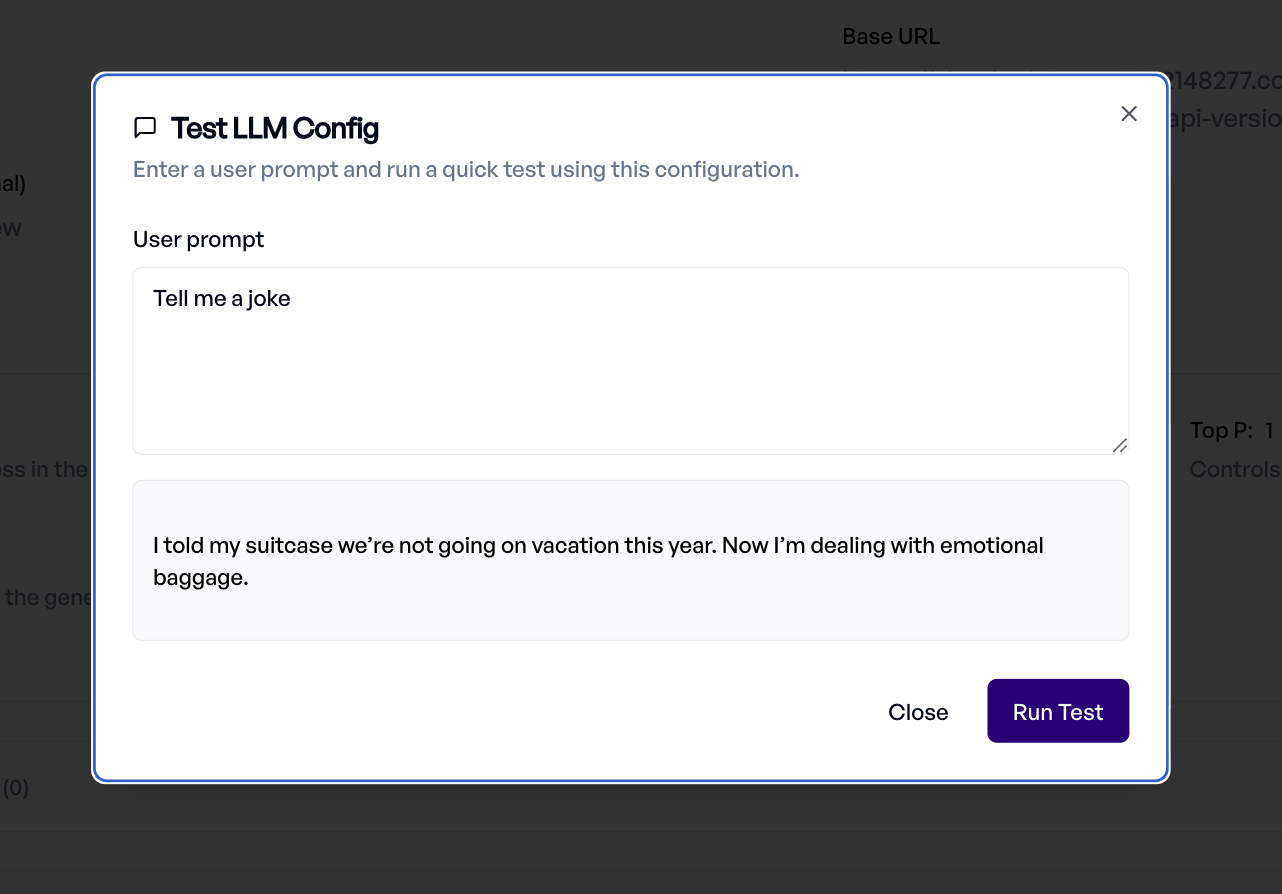

Once the new configuration is ready, you can test it directly from the same screen using the Test Configuration button.

Custom API Endpoints

The Custom API Endpoint option lets you connect your own AI application by defining the request headers, the request body, and a response mapping. Once configured, your custom model can be used in any experiment within the project.

SDK Guide for LLM Configuration

Environment-Based Configuration

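```python
import os

# Assumes `client` is an initialized elluminate client and InferenceType
# has been imported from the elluminate SDK.

# Load the API key from the environment (e.g. variables loaded from a .env file)
production_gpt_config = client.get_or_create_llm_config(
    name="Production GPT",
    defaults={
        "llm_model_name": "gpt-4o",
        "api_key": os.getenv("OPENAI_API_KEY", ""),  # empty string if the variable is unset
        "inference_type": InferenceType.OPENAI,
    },
)
```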
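os.getenv(..., "") silently passes an empty key when the variable is missing. This typically surfaces later as an authentication error. A small hypothetical helper like require_env fails fast instead. It reads only the process environment, so a .env file still has to be loaded first, for example with python-dotenv's load_dotenv().

```python
import os


def require_env(name: str) -> str:
    """Return a non-empty environment variable, raising immediately if it is missing."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set; add it to your environment or .env file")
    return value
```

In the configuration above, `"api_key": require_env("OPENAI_API_KEY")` would replace the os.getenv call.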

Performance Parameters Configuration

```python
performance_config = client.get_or_create_llm_config(
    name="Fast Response Model",
    defaults={
        "llm_model_name": "gpt-4o-mini",
        "api_key": "key",
        "inference_type": InferenceType.OPENAI,
        # Performance settings
        "max_tokens": 500,  # Limit response length
        "temperature": 0.3,  # More deterministic
        "top_p": 0.9,  # Nucleus sampling
        "max_connections": 20,  # Parallel requests
        "timeout": 10,  # Fast timeout in seconds
        "max_retries": 2,  # Limited retries
        "description": "Optimized for quick responses",
    },
)
```

Basic Custom API Setup

For a simple REST API that accepts prompts and returns responses:

```python
custom_config = client.get_or_create_llm_config(
    name="My Custom Model v2",
    defaults={
        "llm_model_name": "custom-model-v2",
        "api_key": "your-api-key",
        "llm_base_url": "https://api.mycompany.com/v1",
        "inference_type": InferenceType.CUSTOM_API,
        "custom_api_config": {
            "headers": {
                "Authorization": "Bearer {{api_key}}",
                "Content-Type": "application/json",
                "X-Model-Version": "{{model}}",
            },
            "body": {"prompt": "{{prompt}}", "max_tokens": 1000, "temperature": 0.7, "stream": False},
            "response_mapping": {"content_path": "data.response", "error_path": "error.message"},
        },
        "description": "Our production recommendation model",
    },
)
```
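The response_mapping fields are dot-separated paths into the JSON your API returns. The sketch below shows one plausible way such paths are resolved. It assumes each segment is a dictionary key and that a non-empty value at error_path signals a failure. resolve_path and extract_content are hypothetical helpers. They can help you check locally that your API's responses match the mapping.

```python
from typing import Any, Dict


def resolve_path(data: Any, path: str) -> Any:
    """Follow a dot-separated path such as "data.response"; return None if any key is missing."""
    current = data
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def extract_content(response: Dict[str, Any], mapping: Dict[str, str]) -> Any:
    """Apply a response_mapping: raise if error_path holds a value, else return content_path."""
    error_path = mapping.get("error_path")
    error = resolve_path(response, error_path) if error_path else None
    if error:
        raise RuntimeError(f"API reported an error: {error}")
    content = resolve_path(response, mapping["content_path"])
    if content is None:
        raise ValueError(f"content_path {mapping['content_path']!r} not found in response")
    return content
```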

Advanced Custom API with Template Variables

Pass template variables from your collections directly to your API:

```python
advanced_config = client.get_or_create_llm_config(
    name="Context-Aware Assistant",
    defaults={
        "llm_model_name": "assistant-v3",
        "api_key": "secret-key",
        "llm_base_url": "https://ai.internal.com",
        "inference_type": InferenceType.CUSTOM_API,
        "custom_api_config": {
            "headers": {"X-API-Key": "{{api_key}}", "Content-Type": "application/json"},
            "body": {
                "query": "{{prompt}}",
                "context": {
                    "user_id": "{{var_user_id}}",  # From template variables
                    "session_id": "{{var_session_id}}",
                    "category": "{{var_category}}",
                    "history": "{{var_conversation_history}}",
                },
                "config": {"model": "{{model}}", "temperature": 0.5, "max_length": 2000},
            },
            "response_mapping": {"content_path": "result.text", "error_path": "status.error_message"},
        },
    },
)
```

For APIs that support multi-turn conversations, use the {{messages}} placeholder to pass the full conversation array:

```python
multiturn_config = client.llm_configs.create(
    name="Multi-Turn Assistant",
    llm_model_name="chat-model",
    api_key="your-api-key",
    llm_base_url="https://api.example.com/chat",
    inference_type="custom_api",
    custom_api_config={
        "headers": {
            "X-API-Key": "{{api_key}}",
            "Content-Type": "application/json",
        },
        "body": {
            "conversation": {
                "turns": "{{messages}}",  # Full message array
            },
            "model": "{{model}}",
        },
        "response_mapping": {
            "content_path": "response.text",
        },
    },
)
```

The {{messages}} placeholder contains every message in the conversation (system, user, and assistant). When it is the entire value of a field (e.g. "turns": "{{messages}}"), it is inserted as a list rather than a string, so the array structure is preserved in the request body. The {{prompt}} placeholder remains available for single-turn use cases.
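The following is a rough sketch of this substitution. It assumes placeholders are replaced recursively throughout the body. A string that consists of a single placeholder is replaced by the raw value, so a list stays a list. A placeholder embedded in longer text is interpolated as text. render is a hypothetical helper. How elluminate formats non-string values embedded in text is also an assumption here; the sketch JSON-encodes them.

```python
import json
import re
from typing import Any, Dict

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def _as_text(value: Any) -> str:
    # Strings are inserted as-is; other values are JSON-encoded (an assumption)
    return value if isinstance(value, str) else json.dumps(value)


def render(template: Any, values: Dict[str, Any]) -> Any:
    """Recursively fill {{name}} placeholders in a JSON-like body template."""
    if isinstance(template, dict):
        return {key: render(value, values) for key, value in template.items()}
    if isinstance(template, list):
        return [render(item, values) for item in template]
    if isinstance(template, str):
        whole = PLACEHOLDER.fullmatch(template)
        if whole:
            # Pure placeholder: substitute the raw value, keeping lists and dicts intact
            return values[whole.group(1)]  # unknown placeholders raise KeyError
        # Embedded placeholder: interpolate into the surrounding text
        return PLACEHOLDER.sub(lambda m: _as_text(values[m.group(1)]), template)
    return template  # numbers, booleans and None pass through unchanged
```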

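Complex Response Parsing

For APIs with complex response structures, use a custom response parser. In the example below, the parser snippet reads the raw response body from raw_response and assigns the final text to parsed_response.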

```python
parser_config = client.get_or_create_llm_config(
    name="Multi-Model Ensemble",
    defaults={
        "llm_model_name": "ensemble",
        "api_key": "api-key",
        "llm_base_url": "https://ensemble.ai/api",
        "inference_type": InferenceType.CUSTOM_API,
        "custom_api_config": {
            "headers": {"Authorization": "{{api_key}}"},
            "body": {"input": "{{prompt}}"},
            "response_mapping": {"content_path": "outputs"},
        },
        "custom_response_parser": """
# Parse ensemble response with multiple model outputs
response_data = json.loads(raw_response)
model_outputs = response_data.get('model_responses', [])

# Keep only high-confidence outputs and join them
combined = ' '.join(m['text'] for m in model_outputs if m.get('confidence', 0) > 0.7)

# Set the final parsed response, falling back to the first output ('' if there are none)
parsed_response = combined or (model_outputs[0]['text'] if model_outputs else '')
""",
    },
)
```
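Before you save a parser, it can help to run it against a sample payload. The harness below assumes the parser runs with raw_response (the raw body as a string) and json in scope, and that it must assign parsed_response, as the example above suggests. run_parser is a hypothetical helper, and elluminate's actual execution environment may differ. PARSER_SOURCE is a copy of the example parser with a guard for an empty model_responses list.

```python
import json

PARSER_SOURCE = """
response_data = json.loads(raw_response)
model_outputs = response_data.get('model_responses', [])
combined = ' '.join(m['text'] for m in model_outputs if m.get('confidence', 0) > 0.7)
parsed_response = combined or (model_outputs[0]['text'] if model_outputs else '')
"""


def run_parser(source: str, raw_response: str) -> str:
    """Execute a parser snippet locally with raw_response and json in scope; return parsed_response."""
    namespace = {"json": json, "raw_response": raw_response}
    exec(source, namespace)  # only run parser code you trust
    if "parsed_response" not in namespace:
        raise ValueError("parser did not assign parsed_response")
    return namespace["parsed_response"]
```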

Monitoring and Analytics

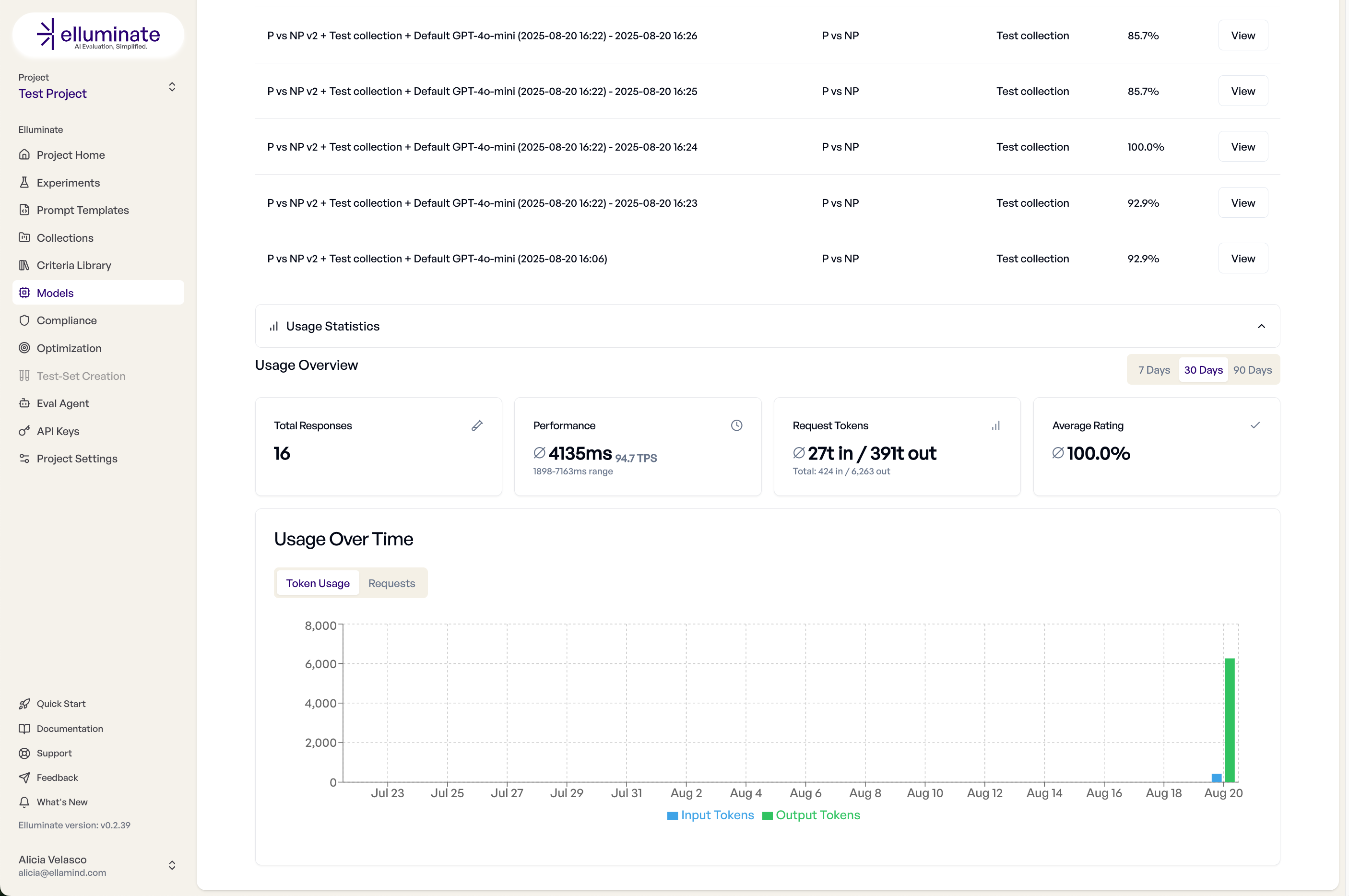

Usage Dashboard

To track how a specific model configuration is used, open its Configuration Details. They show the experiments run with that configuration along with performance metrics.

Best Practices

Configuration Strategy

- Development: Use cheaper, faster models

- Staging: Test with production models

- Production: Optimize parameters for your use case

- Monitoring: Set up your own endpoints for visibility
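One way to apply this strategy from the SDK is to keep per-environment settings in a single table and choose one at startup. The sketch below is hypothetical throughout. The APP_ENV variable, the profile names, and the parameter values are examples, not recommendations from elluminate.

```python
import os
from typing import Any, Dict, Optional

# Hypothetical per-environment settings; model names and values are examples only
ENVIRONMENT_PROFILES: Dict[str, Dict[str, Any]] = {
    "development": {"llm_model_name": "gpt-4o-mini", "temperature": 0.3, "max_tokens": 500},
    "staging": {"llm_model_name": "gpt-4o", "temperature": 0.3, "max_tokens": 1000},
    "production": {"llm_model_name": "gpt-4o", "temperature": 0.2, "max_tokens": 1000},
}


def profile_for(env: Optional[str] = None) -> Dict[str, Any]:
    """Return a copy of the settings for env (defaults to $APP_ENV, then "development")."""
    env = env or os.getenv("APP_ENV", "development")
    if env not in ENVIRONMENT_PROFILES:
        raise ValueError(f"Unknown environment {env!r}; expected one of {sorted(ENVIRONMENT_PROFILES)}")
    return dict(ENVIRONMENT_PROFILES[env])  # copy so callers cannot mutate the shared table
```

You can merge the returned dict into the defaults of get_or_create_llm_config, e.g. `defaults={**profile_for(), "api_key": os.getenv("OPENAI_API_KEY", ""), "inference_type": InferenceType.OPENAI}`. Include the environment name in the configuration name so the configurations stay distinct.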

Custom API Best Practices

- Standardize Response Format: Use consistent JSON structure

- Include Metadata: Return model version, latency and confidence

- Error Handling: Provide clear error messages

- Rate Limiting: Implement appropriate throttling

- Monitoring: Log all requests for analysis
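The following illustrates these practices with the core of a hypothetical endpoint that matches the Basic Custom API Setup above. It reads prompt from the request body, returns the text under data.response, reports failures under error.message, and adds metadata. This framework-agnostic sketch relies on several assumptions. handle_request, MODEL_VERSION, the meta field, and the generate callback are all hypothetical. It also assumes elluminate reads error_path from non-200 responses as well.

```python
import time
from typing import Any, Callable, Dict, Tuple

MODEL_VERSION = "custom-model-v2"  # hypothetical; report whatever version you actually serve


def handle_request(
    body: Dict[str, Any], generate: Callable[[str], Tuple[str, float]]
) -> Tuple[int, Dict[str, Any]]:
    """Return (HTTP status, JSON payload) for content_path "data.response" and error_path "error.message"."""
    prompt = body.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": {"message": "Request body must include a non-empty 'prompt' string"}}
    started = time.perf_counter()
    try:
        text, confidence = generate(prompt)
    except Exception as exc:  # surface failures in the mapped error field with a clear message
        return 500, {"error": {"message": f"Generation failed: {exc}"}}
    latency_ms = round((time.perf_counter() - started) * 1000, 1)
    return 200, {
        "data": {"response": text},
        "meta": {"model_version": MODEL_VERSION, "latency_ms": latency_ms, "confidence": confidence},
    }
```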

Troubleshooting

Common Issues

Connection Failed

- Verify API key is valid

- Check base URL format

- Ensure network connectivity

- Confirm firewall rules
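For the base URL check, a quick local sanity test catches the most common formatting mistakes before you use Test Configuration. check_base_url is a hypothetical helper that uses only the standard library. It does not contact the server.

```python
from typing import List
from urllib.parse import urlparse


def check_base_url(url: str) -> List[str]:
    """Return a list of likely problems with a base URL (an empty list means it looks fine)."""
    problems = []
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https"):
        problems.append("URL should start with http:// or https://")
    if not parsed.netloc:
        problems.append("URL is missing a host name")
    if url != url.strip():
        problems.append("URL has leading or trailing whitespace")
    if parsed.query or parsed.fragment:
        problems.append("base URL should not contain a query string or fragment")
    return problems
```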

Slow Responses

- Reduce max_tokens

- Lower temperature

- Check API rate limits

- Consider a smaller, faster model

Inconsistent Results

- Lower temperature for determinism

- Set seed parameter if available

- Use consistent system prompts

- Verify model version