The Basics

Master the core components of elluminate and how they work together to enable systematic evaluation of AI systems

This page explains how these components work together. To see them in action, check out our Quick Start, or explore the detailed concept guides in this section.

Projects and Templates

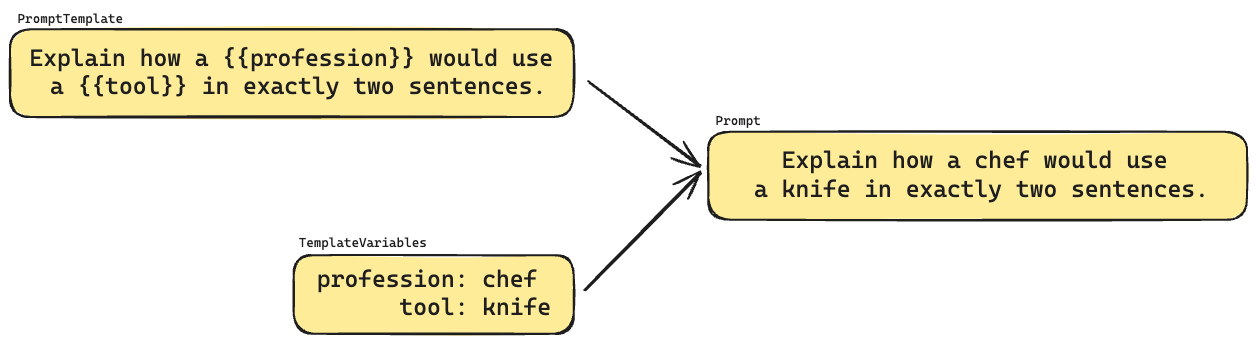

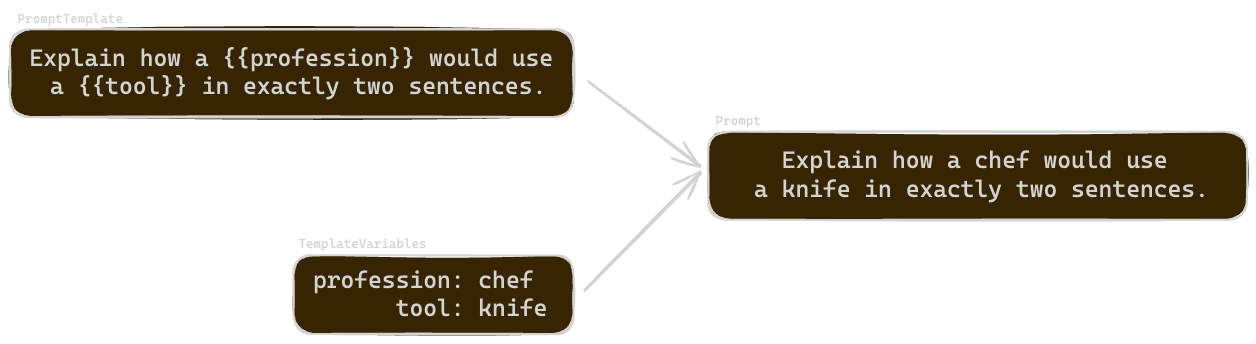

At the heart of elluminate are four concepts: Projects, PromptTemplates, TemplateVariables, and the Prompts that result from combining them.

- Projects serve as the top-level container for organizing all your evaluation work. Projects can be either private or public within your organization.

- Prompt templates are reusable templates containing placeholders (variables) that are filled in with specific values.

- Template variables are key-value pairs whose values are inserted into a prompt template in place of its placeholders.

- Prompts are the result of combining a prompt template with a set of template variables.

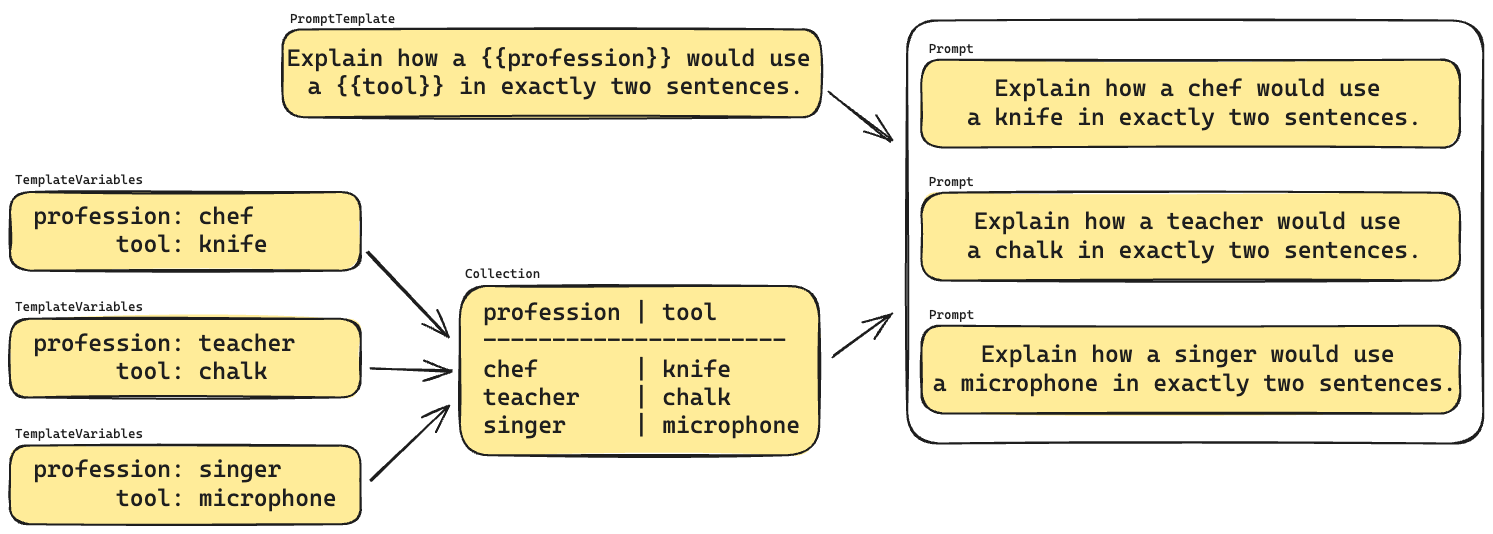

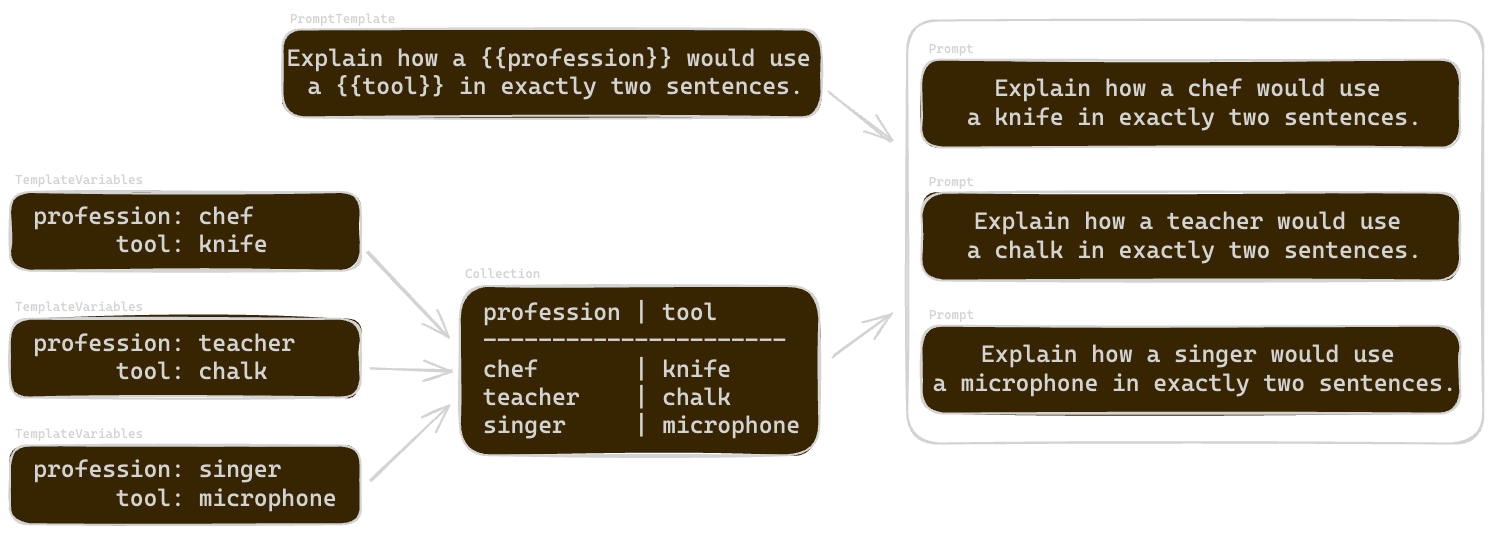
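The following minimal Python sketch makes the relationship concrete by showing how a template and its variables combine into a prompt. It is not the elluminate SDK. The `PromptTemplate` class, its `render` method, and the `{{name}}` placeholder syntax are assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass

# Hypothetical placeholder syntax: {{name}}. This is an assumption for
# illustration, not necessarily the syntax elluminate uses.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


@dataclass(frozen=True)
class PromptTemplate:
    """A reusable template with named placeholders (hypothetical model)."""
    text: str

    def variable_names(self) -> set[str]:
        """Names of all placeholders that appear in the template."""
        return set(PLACEHOLDER.findall(self.text))

    def render(self, variables: dict[str, str]) -> str:
        """Combine the template with template variables to produce a prompt."""
        missing = self.variable_names() - set(variables)
        if missing:
            raise KeyError(f"missing template variables: {sorted(missing)}")
        return PLACEHOLDER.sub(lambda m: variables[m.group(1)], self.text)


template = PromptTemplate("Summarize the following {{doc_type}} in {{language}}:\n{{text}}")
prompt = template.render({"doc_type": "email", "language": "German", "text": "Hi team, ..."})
print(prompt)
```

This sketch raises an error when a variable is missing instead of leaving the placeholder in place. That is one reasonable design choice, because it keeps a half-filled prompt from silently reaching a model.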

Collections

Sets of template variables can be grouped into a Collection (specifically, a TemplateVariablesCollection). A collection organizes related sets of template variables. Rendering a template once for each set lets you generate multiple prompts systematically.

Collections help you:

- Organize related sets of variables

- Generate multiple prompts from a single template

- Maintain consistent test cases

- Scale evaluation across diverse scenarios
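A collection can be sketched as a list of variable sets, one per test case, rendered against a single template. This is a hypothetical illustration in plain Python. The list-of-dicts shape and the `render` helper are assumptions and do not represent the TemplateVariablesCollection API.

```python
import re

# Hypothetical {{name}} placeholder syntax (an assumption for illustration).
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict[str, str]) -> str:
    """Substitute each {{name}} placeholder with its value."""
    return PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


# A collection modeled as a plain list of variable sets: one set per test case.
collection = [
    {"question": "What is the capital of France?", "tone": "formal"},
    {"question": "How do I reset my password?", "tone": "friendly"},
    {"question": "Explain recursion.", "tone": "concise"},
]

template = "Answer in a {{tone}} tone: {{question}}"

# One template plus N variable sets yields N prompts, built the same way every time.
prompts = [render(template, variables) for variables in collection]
for p in prompts:
    print(p)
```

Adding a test case means adding one more variable set. The template itself stays unchanged, which keeps the test cases consistent.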

Evaluation and Analysis

Each prompt is sent to a model, typically an LLM, which generates a response. elluminate lets you systematically evaluate these responses using Criterion Sets and analyze the results through Response Analysis.

Criterion Sets and Criteria

- Criterion Sets group related evaluation questions

- Criteria are specific binary questions that assess response quality

- Each criterion receives a "yes" or "no" rating with detailed reasoning
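The rating structure described above can be sketched as follows. The `Criterion`, `Rating`, and `evaluate` names are hypothetical. The keyword-matching `mentions` check only stands in for whatever rater actually produces the yes/no verdict and reasoning; it is not how elluminate rates responses.

```python
from dataclasses import dataclass
from typing import Callable, Literal


@dataclass(frozen=True)
class Criterion:
    """A binary question about a response (hypothetical model)."""
    question: str
    # Stand-in rater returning (passed, reasoning). Illustration only.
    check: Callable[[str], tuple[bool, str]]


@dataclass(frozen=True)
class Rating:
    criterion: str
    rating: Literal["yes", "no"]
    reasoning: str


def evaluate(response: str, criterion_set: list[Criterion]) -> list[Rating]:
    """Rate one response against every criterion in the set."""
    ratings = []
    for c in criterion_set:
        passed, reasoning = c.check(response)
        ratings.append(Rating(c.question, "yes" if passed else "no", reasoning))
    return ratings


def mentions(word: str) -> Callable[[str], tuple[bool, str]]:
    """Build a toy check that passes when `word` appears in the response."""
    def check(response: str) -> tuple[bool, str]:
        found = word.lower() in response.lower()
        return found, f"'{word}' {'appears' if found else 'does not appear'} in the response."
    return check


criterion_set = [
    Criterion("Does the response name Paris as the capital?", mentions("Paris")),
    Criterion("Does the response apologize?", mentions("sorry")),
]

for r in evaluate("The capital of France is Paris.", criterion_set):
    print(f"{r.rating:>3}  {r.criterion}  ({r.reasoning})")
```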

Experiments

Experiments bring everything together by combining:

- A specific prompt template

- A collection of template variables (the test cases)

- LLM configuration settings

- Criterion sets for evaluation
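The following minimal sketch models an experiment as a bundle of the four ingredients listed above. Its `run` method renders each prompt, queries a model, and rates the response. Everything here is hypothetical. `LLMConfig`, `Experiment`, and `fake_llm` are illustrative names, and `fake_llm` simply upper-cases the prompt in place of a real LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Assumed {{name}} placeholder syntax, for illustration only.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


@dataclass(frozen=True)
class LLMConfig:
    """Hypothetical LLM settings; the field names are illustrative."""
    model: str
    temperature: float = 0.0


@dataclass
class Experiment:
    """Bundles a template, a collection, an LLM config, and criteria."""
    template: str
    collection: list[dict[str, str]]
    llm_config: LLMConfig
    criteria: dict[str, Callable[[str], bool]]  # question -> yes/no check

    def run(self, llm: Callable[[str, LLMConfig], str]) -> list[dict]:
        results = []
        for variables in self.collection:
            # Render the prompt for this test case.
            prompt = PLACEHOLDER.sub(lambda m: variables[m.group(1)], self.template)
            # Generate a response using the experiment's LLM settings.
            response = llm(prompt, self.llm_config)
            # Rate the response against every criterion.
            ratings = {q: "yes" if check(response) else "no"
                       for q, check in self.criteria.items()}
            results.append({"prompt": prompt, "response": response, "ratings": ratings})
        return results


def fake_llm(prompt: str, config: LLMConfig) -> str:
    """Stand-in for a real model call: returns the prompt in upper case."""
    return prompt.upper()


experiment = Experiment(
    template="Write a greeting for {{name}}.",
    collection=[{"name": "Ada"}, {"name": "Grace Hopper, rear admiral"}],
    llm_config=LLMConfig(model="example-model"),
    criteria={
        "Is the response in upper case?": str.isupper,
        "Is the response under 40 characters?": lambda r: len(r) < 40,
    },
)

for result in experiment.run(fake_llm):
    print(result["ratings"])
```

The sketch keeps all four ingredients in one object. Rerunning it with a different `LLMConfig` changes only the model settings while the prompts and criteria stay fixed, which is what makes the results comparable.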

Response Analysis

Once responses are generated and evaluated, Response Analysis provides tools to:

- Review individual response ratings

- Identify patterns across multiple responses

- Compare performance across different configurations

- Make data-driven improvements to your AI system
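Assume ratings are available as one yes/no mapping per response. The kinds of analysis listed above then reduce to simple aggregation. The sketch below computes per-criterion pass rates for two configurations and lists the responses that failed a given criterion. The ratings are made-up sample data, and the function names are hypothetical.

```python
from collections import defaultdict

# Made-up sample ratings: configuration name -> one rating dict per response.
results = {
    "model-a": [
        {"accurate": "yes", "concise": "no"},
        {"accurate": "yes", "concise": "yes"},
        {"accurate": "no", "concise": "yes"},
        {"accurate": "yes", "concise": "no"},
    ],
    "model-b": [
        {"accurate": "yes", "concise": "yes"},
        {"accurate": "no", "concise": "yes"},
        {"accurate": "no", "concise": "yes"},
        {"accurate": "yes", "concise": "yes"},
    ],
}


def pass_rates(ratings: list[dict[str, str]]) -> dict[str, float]:
    """Fraction of 'yes' ratings per criterion across all responses."""
    yes: dict[str, int] = defaultdict(int)
    for response_ratings in ratings:
        for criterion, rating in response_ratings.items():
            yes[criterion] += rating == "yes"
    return {c: yes[c] / len(ratings) for c in yes}


def failing(ratings: list[dict[str, str]], criterion: str) -> list[int]:
    """Indices of responses rated 'no' on a criterion, for individual review."""
    return [i for i, r in enumerate(ratings) if r[criterion] == "no"]


for config, ratings in results.items():
    print(config, pass_rates(ratings))
print("model-a responses failing 'concise':", failing(results["model-a"], "concise"))
```

With this sample data, `model-a` scores higher on `accurate` and `model-b` scores higher on `concise`. A side-by-side comparison is meant to surface exactly this kind of trade-off.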

Version Control

Versioning ensures evaluation consistency and enables change tracking:

- All components (templates, collections, criterion sets) are versioned

- Experiments reference specific versions for reproducibility

- Changes create new versions while preserving historical data
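The versioning behavior can be sketched with immutable snapshots. Editing appends a new version instead of overwriting the old one, and an experiment stores the version number it used. `TemplateVersion` and `TemplateHistory` are hypothetical names for illustration, not elluminate classes.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class TemplateVersion:
    """An immutable snapshot of a prompt template (hypothetical model)."""
    name: str
    version: int
    text: str


class TemplateHistory:
    """Keeps every version; editing appends rather than overwrites."""

    def __init__(self, name: str, text: str) -> None:
        self.versions = [TemplateVersion(name, 1, text)]

    @property
    def latest(self) -> TemplateVersion:
        return self.versions[-1]

    def update(self, new_text: str) -> TemplateVersion:
        """Create a new version; earlier versions remain untouched."""
        new = replace(self.latest, version=self.latest.version + 1, text=new_text)
        self.versions.append(new)
        return new

    def get(self, version: int) -> TemplateVersion:
        return self.versions[version - 1]


history = TemplateHistory("support-answer", "Answer politely: {{question}}")

# An experiment pins the exact version it used...
experiment_ref = ("support-answer", history.latest.version)

# ...so a later edit creates version 2 without changing what the experiment saw.
history.update("Answer politely and briefly: {{question}}")

pinned = history.get(experiment_ref[1])
print(pinned.text)
print(history.latest.version)
```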

Next Steps

Now that you understand the basic concepts, you can:

- Explore each concept in detail using the guides in this section

- Follow our Quick Start to see these concepts in action

- Try the SDK examples for programmatic access