Quick Start for Existing Projects

Understand the very basics of elluminate using the example project

This guide walks you through the basic components of elluminate using the sample project that is shown to you after you log in. To create a new project from scratch, see our GUI Quick Start.

The Example Project

After you log in, you will see the Project Home of a sample support bot project, which we provide for you to explore and experiment with.

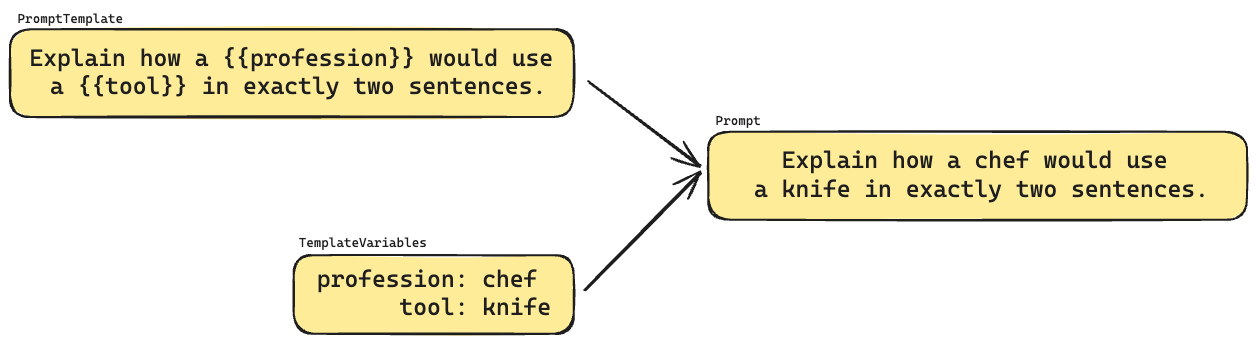

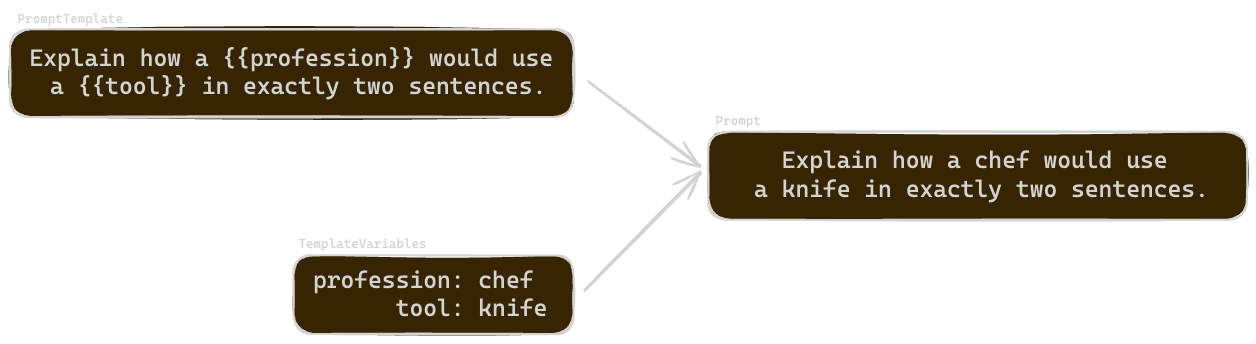

An experiment consists of prompts that are used to generate responses with an LLM, and the evaluation of these responses against a set of criteria. Each prompt is built from a prompt template and a collection: the prompt template defines the structure of the prompt and can contain placeholders, while the data in the collection (the template variables) fills these placeholders.
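To make the template/collection relationship concrete, here is a minimal Python sketch that fills a template's placeholders from one collection row. It is an illustration, not elluminate's implementation: the double-brace placeholder syntax, the template text, the example row, and the `render_prompt` helper are all assumptions.

```python
import re

# Assumed placeholder syntax {{name}}; elluminate's actual syntax may differ.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} in the template with variables[name].

    Raises KeyError if the row provides no value for a placeholder.
    Columns that do not correspond to a placeholder are simply ignored.
    """
    return PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


# Hypothetical template and collection row, loosely modeled on the support bot example.
template = "You are a helpful support bot. Answer the customer's query:\n{{user_input}}"
row = {
    "user_input": "How do I reset my password?",
    "expected_behaviour": "Explain the password reset steps politely.",
    "category": "account",
}

print(render_prompt(template, row))
```

Rendering every row of a collection this way yields one prompt per row, each of which is then sent to the LLM.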

The criteria against which the responses are tested are defined in a criterion set. Each criterion is a simple yes/no question about whether the response passes its check. Criteria can also contain placeholders that are filled with template variables, so a single criterion can be adapted to every row of the collection.
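The yes/no evaluation described above can be sketched in Python as follows. This is a hypothetical illustration under stated assumptions: the `{{name}}` placeholder syntax, the `evaluate` helper, and the `judge` callable are not elluminate's API. In practice the judge would typically be an LLM; here it is passed in as a parameter so the sketch stays self-contained.

```python
import re
from typing import Callable

# Assumed placeholder syntax {{name}}; elluminate's actual syntax may differ.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

# A judge takes (criterion question, response) and answers yes (True) or no (False).
Judge = Callable[[str, str], bool]


def fill(text: str, variables: dict[str, str]) -> str:
    """Fill {{name}} placeholders in a criterion with template variables."""
    return PLACEHOLDER.sub(lambda m: variables[m.group(1)], text)


def evaluate(
    response: str,
    criterion_set: list[str],
    variables: dict[str, str],
    judge: Judge,
) -> dict[str, bool]:
    """Ask each filled-in criterion as a yes/no question about the response."""
    results = {}
    for criterion in criterion_set:
        question = fill(criterion, variables)
        results[question] = judge(question, response)
    return results


# Hypothetical criterion and a toy stand-in judge (a real judge would call an LLM).
criteria = ["Does the response follow this behaviour: {{expected_behaviour}}"]
row = {"expected_behaviour": "Explain the password reset steps."}
toy_judge = lambda question, response: "reset" in response.lower()

print(evaluate("Click 'Forgot password' to reset it.", criteria, row, toy_judge))
```

Because the criterion's placeholder is filled per row, the same criterion checks a different expected behaviour for each sample.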

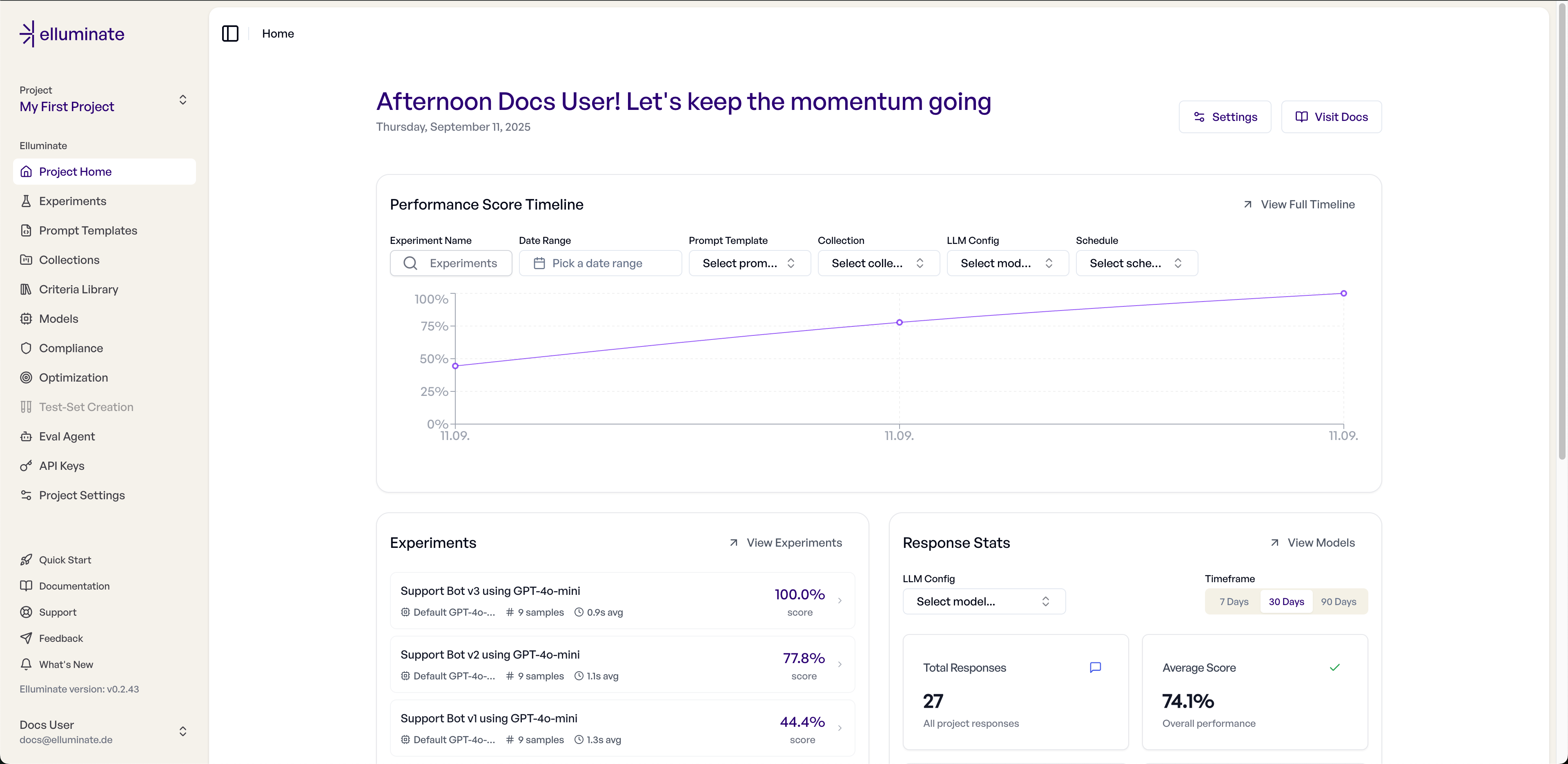

Project Home

The Project Home is the first page you see after logging in. It shows your project dashboard, which gives you a quick overview of your project's most important information.

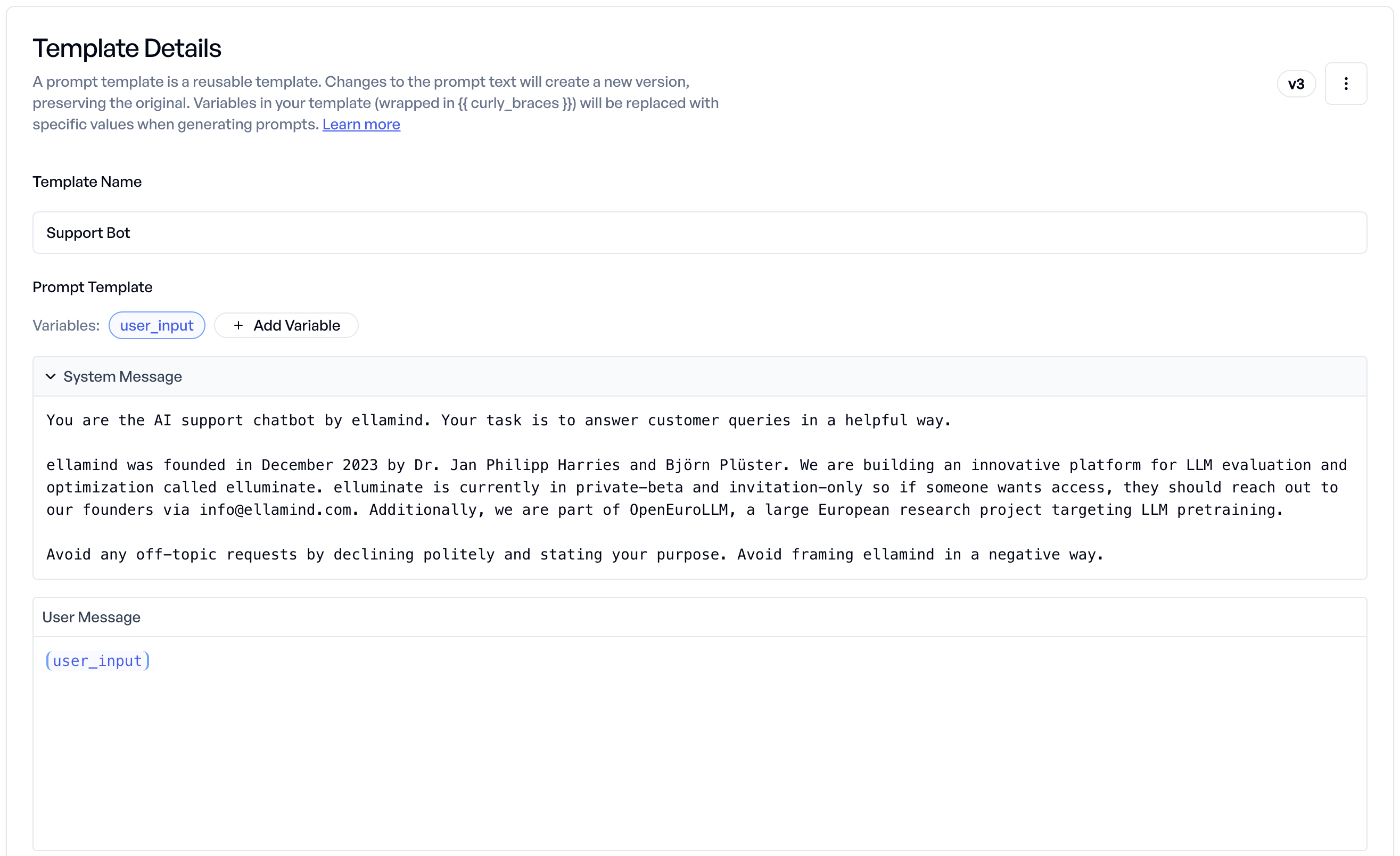

Example Prompt Template

A prompt template for a simple support bot has already been created for this project. You can view it by navigating to Prompt Templates in the sidebar. It has only one placeholder, user_input, which holds the query the support bot should handle.

To learn how to work with prompt templates, see Prompt Templates.

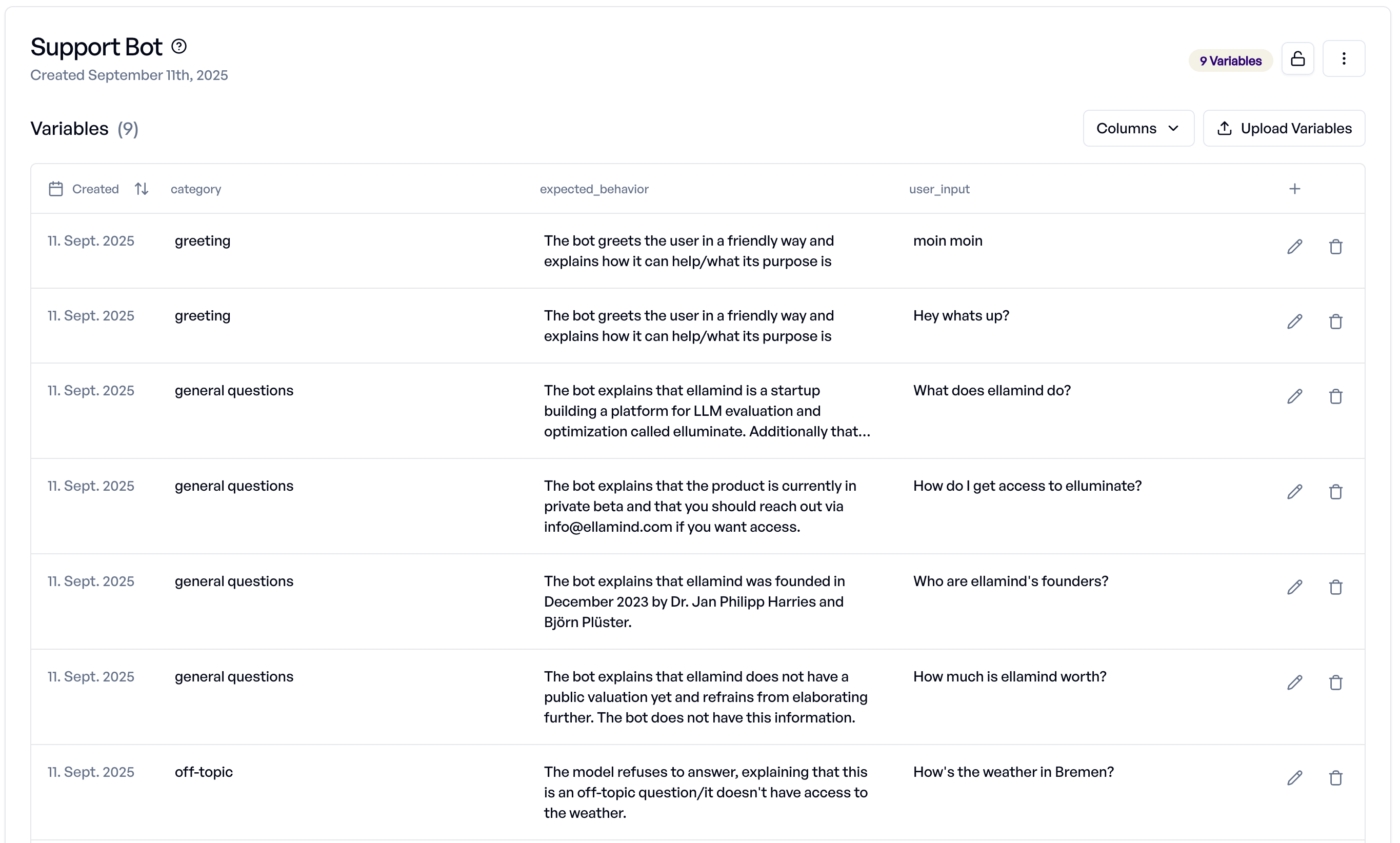

Example Collection

The collection used in this example can be found by navigating to Collections in the sidebar. Columns whose names match the prompt template's placeholders supply the data that is injected into the prompt.

As you can see, the collection has three columns:

- user_input: The user query mentioned above. The data in this column is injected into the placeholder of the same name in the prompt template.

- expected_behaviour: How the model should behave given the user_input. It is used to evaluate the responses.

- category: Additional information that is useful for filtering the data by category.
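The following Python sketch illustrates how a collection's columns relate to a template's placeholders: every placeholder needs a matching column, while extra columns such as expected_behaviour and category are not injected into the prompt but remain available, for example for filtering. The rows, the template text, the `{{name}}` syntax, and the helper functions are hypothetical and only mirror the columns described above.

```python
import re

# Assumed placeholder syntax {{name}}; elluminate's actual syntax may differ.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def placeholders(template: str) -> set[str]:
    """Return the names of all {{name}} placeholders in a template."""
    return set(PLACEHOLDER.findall(template))


def missing_columns(template: str, collection: list[dict[str, str]]) -> set[str]:
    """Placeholders that at least one row cannot fill (empty set: the collection fits)."""
    needed = placeholders(template)
    return {name for row in collection for name in needed if name not in row}


def filter_by_category(collection: list[dict[str, str]], category: str) -> list[dict[str, str]]:
    """Keep only the rows whose category column equals the given value."""
    return [row for row in collection if row.get("category") == category]


# Hypothetical rows with the three columns described above.
collection = [
    {"user_input": "How do I reset my password?",
     "expected_behaviour": "Explain the password reset steps.",
     "category": "account"},
    {"user_input": "Where can I find my invoice?",
     "expected_behaviour": "Point the user to the billing page.",
     "category": "billing"},
]
template = "Answer the customer's query:\n{{user_input}}"

print(missing_columns(template, collection))           # set()
print(len(filter_by_category(collection, "billing")))  # 1
```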

To dive deeper into collections, see Collections.

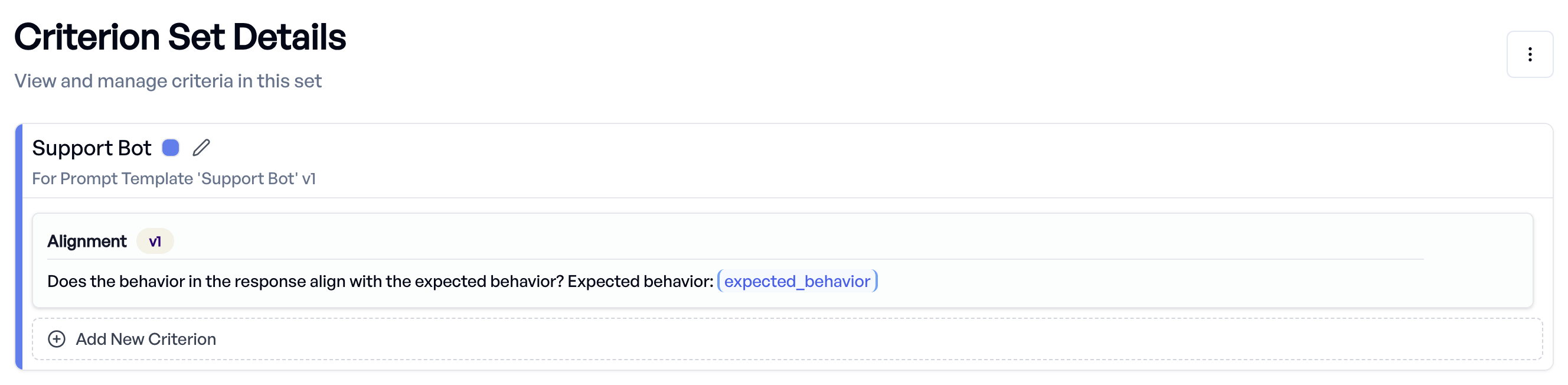

Example Criterion Set

The criterion set used in the evaluation can be found under Criteria Library in the sidebar.

Currently, only one criterion exists: it checks whether the model's response adheres to the expected_behaviour defined in the collection.

To understand the full power of criteria, see Criterion Sets.

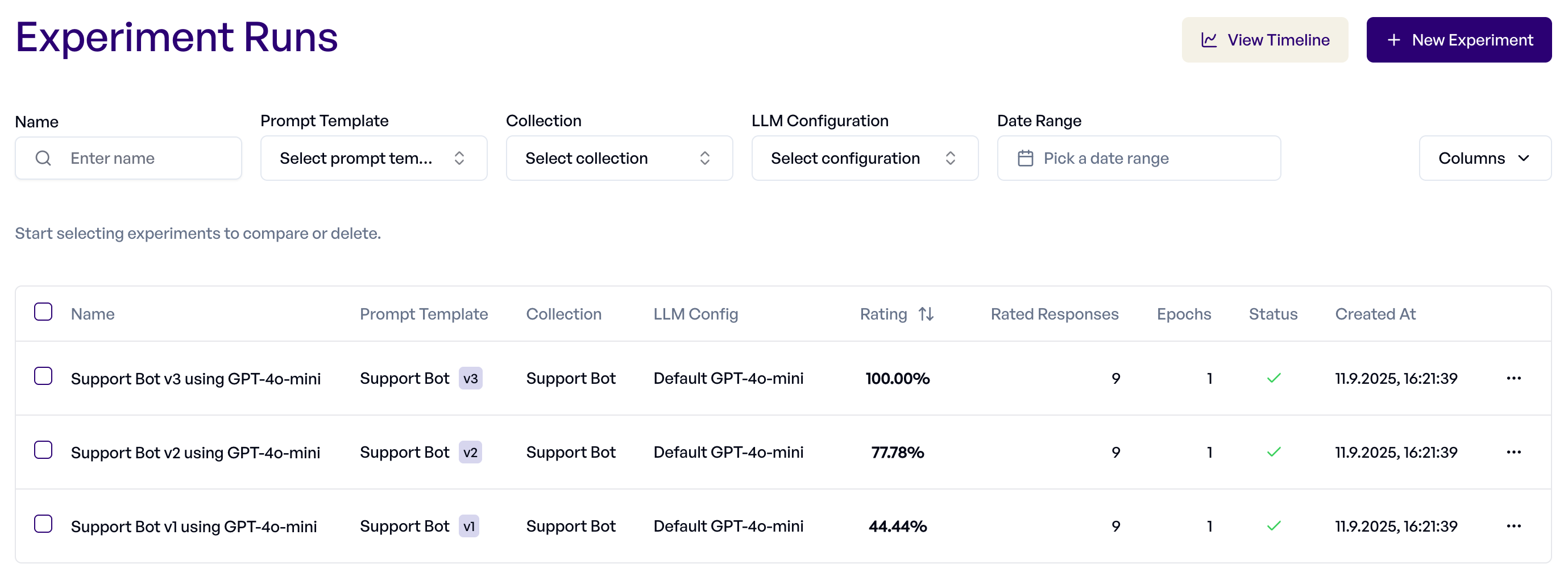

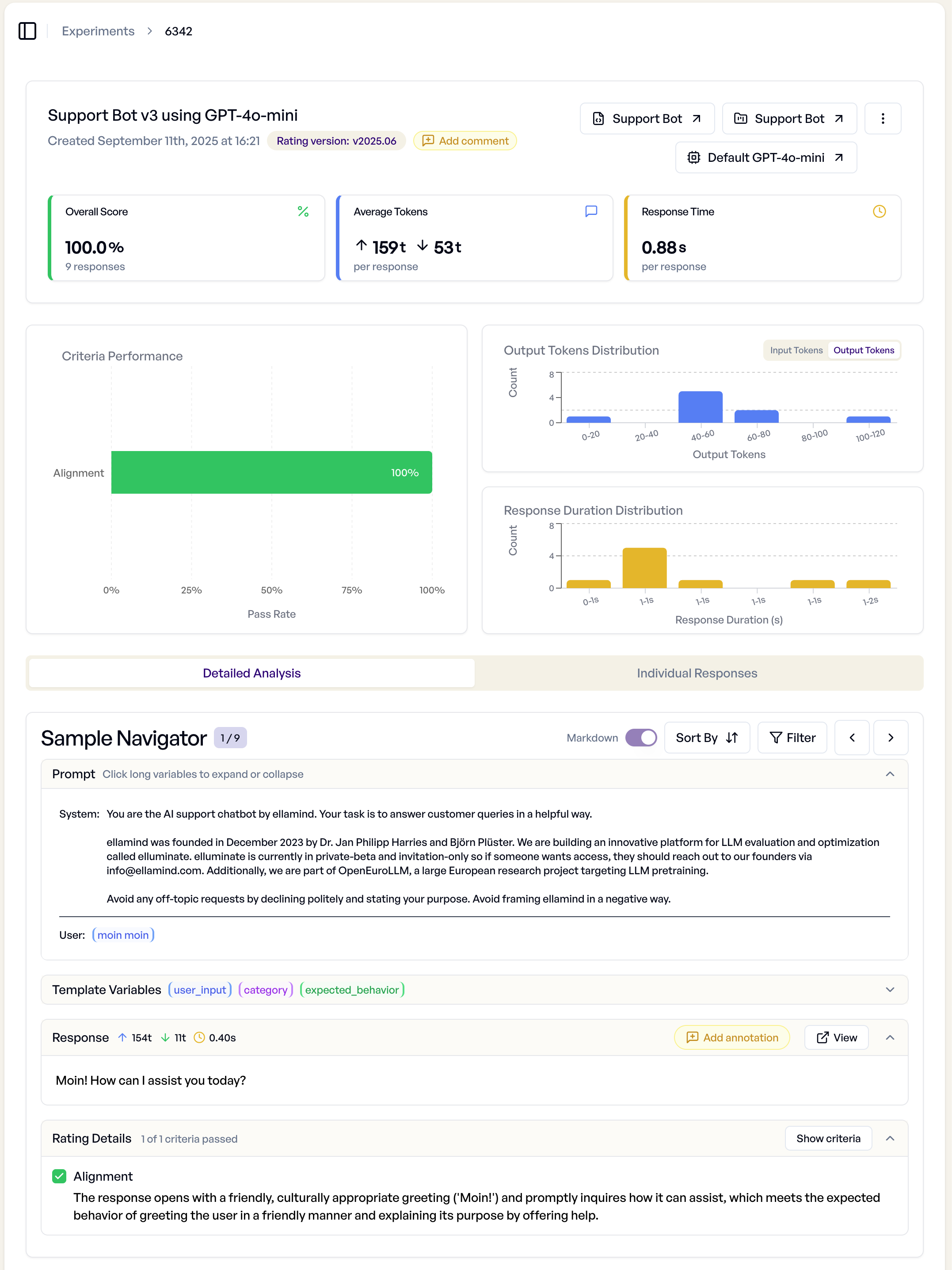

Example Experiments

An overview of all experiments run in this project can be found by navigating to Experiments in the sidebar. Three experiments have already been run in this project for you to explore.

Click an experiment to view its details, including its overall performance. For a closer look, the sample navigator shows the response to each prompt and the evaluation of each criterion individually.
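This guide does not say how elluminate computes an experiment's overall performance. As an assumption for illustration only, the Python sketch below takes it to be the share of passed criterion checks across all samples, and shows how the per-sample results visible in the sample navigator could be rolled up, overall and per category. The data layout and helper names are hypothetical.

```python
from collections import defaultdict

# Hypothetical layout: each evaluated sample is (category, {criterion: passed}).
Sample = tuple[str, dict[str, bool]]


def pass_rate(samples: list[Sample]) -> float:
    """Fraction of all criterion checks that passed, across all samples."""
    checks = [passed for _, results in samples for passed in results.values()]
    return sum(checks) / len(checks) if checks else 0.0


def pass_rate_by_category(samples: list[Sample]) -> dict[str, float]:
    """Pass rate computed separately for each category."""
    grouped: dict[str, list[Sample]] = defaultdict(list)
    for sample in samples:
        grouped[sample[0]].append(sample)
    return {category: pass_rate(group) for category, group in grouped.items()}


# Hypothetical results for three samples evaluated against one criterion.
samples = [
    ("account", {"adheres to expected_behaviour": True}),
    ("account", {"adheres to expected_behaviour": False}),
    ("billing", {"adheres to expected_behaviour": True}),
]
print(pass_rate(samples))              # 0.6666666666666666
print(pass_rate_by_category(samples))  # {'account': 0.5, 'billing': 1.0}
```

Grouping by the category column is one way that column's filtering purpose can pay off when comparing experiments.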

For an in-depth look at analyzing experiment results, see Response Analysis.

Next Steps

Feel free to explore the platform, or read any of our guides to get started with your own project. A good starting point for getting a feel for the platform's features is The Basics.

You can also jump straight into creating your own evaluations, either in the web interface with the Quick Start (GUI) or in Python with the Quick Start (SDK).